
How to Configure SRT Settings on Your Video Encoder for Optimal Performance

Are you new to configuring SRT streams? We’ve put together this quick guide to help you learn the basics of how to configure and tune SRT settings for optimizing stream performance for your specific use case. In this blog post, we’re providing a 7-point checklist for configuring an SRT stream using a Haivision Makito X4 video encoder as a source and a Makito X4 video decoder as the destination device.

Let’s start off with a quick reminder of what SRT is and what it does.

SRT Fundamentals

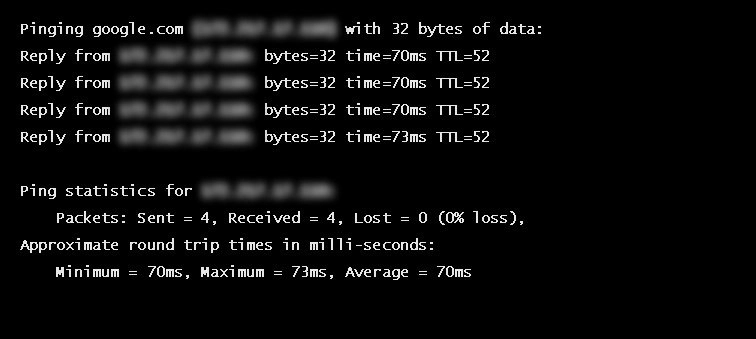

As the public internet gained availability and bandwidth, more people tried to use it for streaming live video, but overcoming packet loss and latency proved extremely challenging. The internet is very unpredictable: between any two points, bandwidth can vary enormously, as can the rate of packet loss, jitter caused by timing issues, and latency depending on distance and routes.

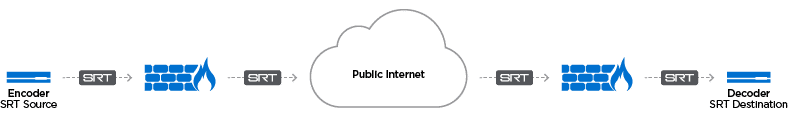

SRT (Secure Reliable Transport) was designed specifically to address these issues, and its purpose is simple: to reliably get video content from point A to point B over the internet while protecting it with encryption.

SRT enables streamers to tune latency down to tens of milliseconds for cross-continental video links, a critical feature for workflows such as interactive, bi-directional interviews and remote production.

SRT Statistics: Know Your Network

Not only does SRT enable the secure transport of your video content, it constantly monitors and measures the bandwidth between the two endpoints, providing a whole host of useful statistics, from the number of lost packets to the estimated link bandwidth, latency, and round-trip time.

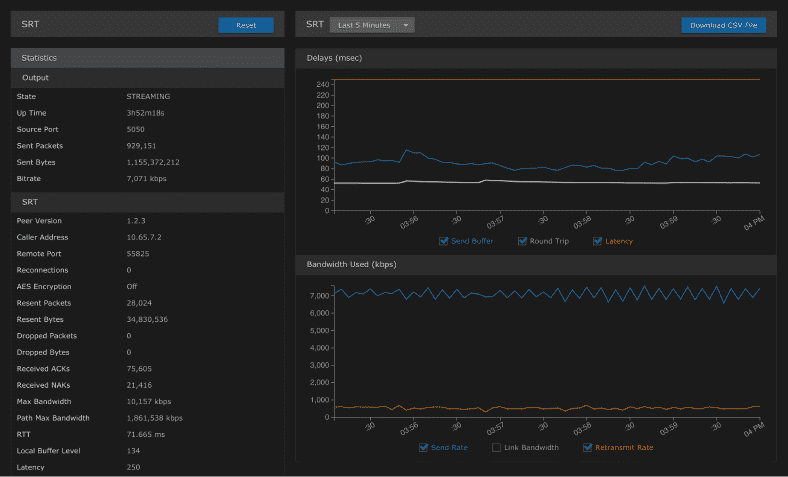

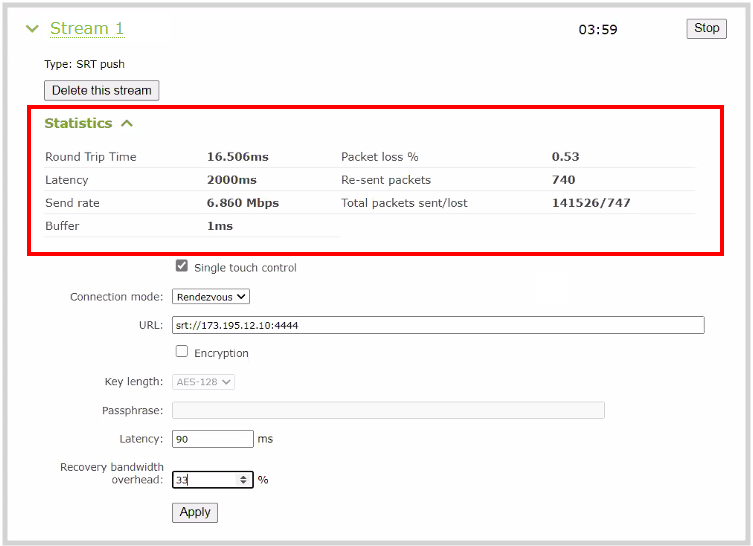

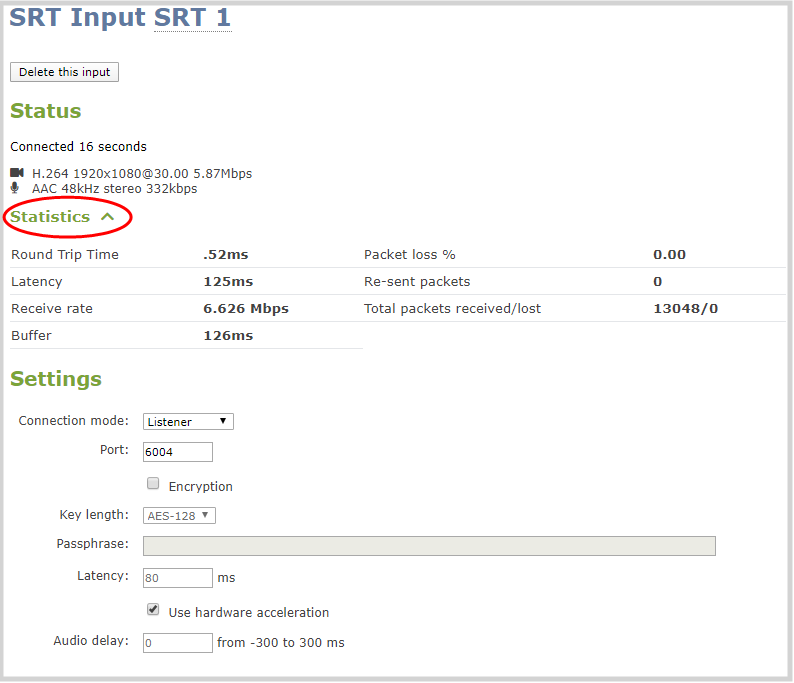

Makito X4 video encoder graphical statistics display

The statistics generated provide valuable insight into your network and stream’s conditions. Armed with a deeper understanding of these statistics, you can better tune and optimize your SRT streaming performance.

SRT Configuration and Tuning Checklist

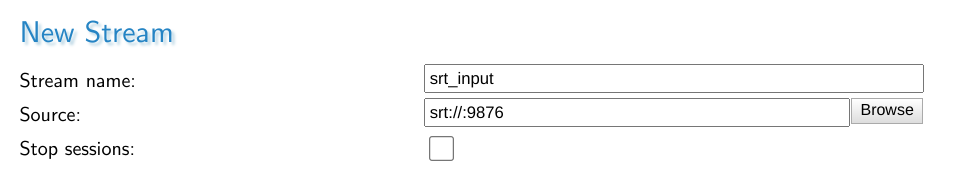

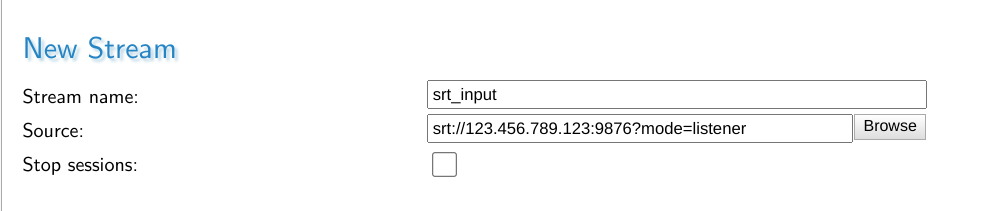

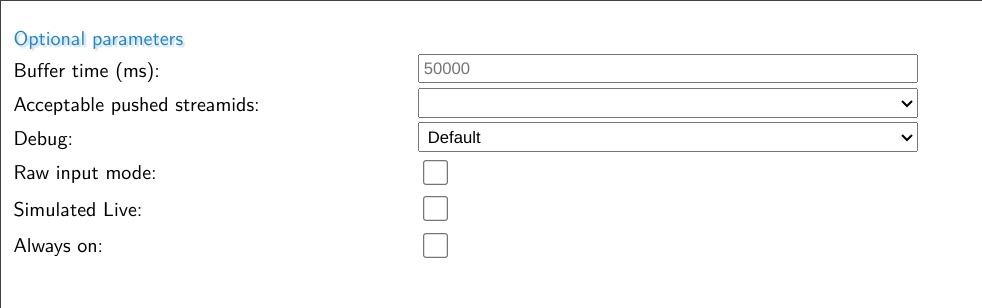

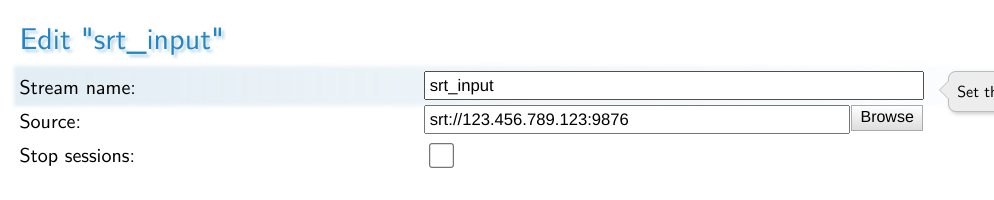

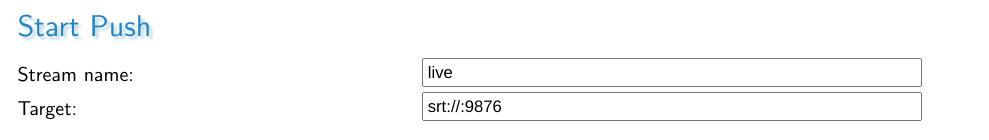

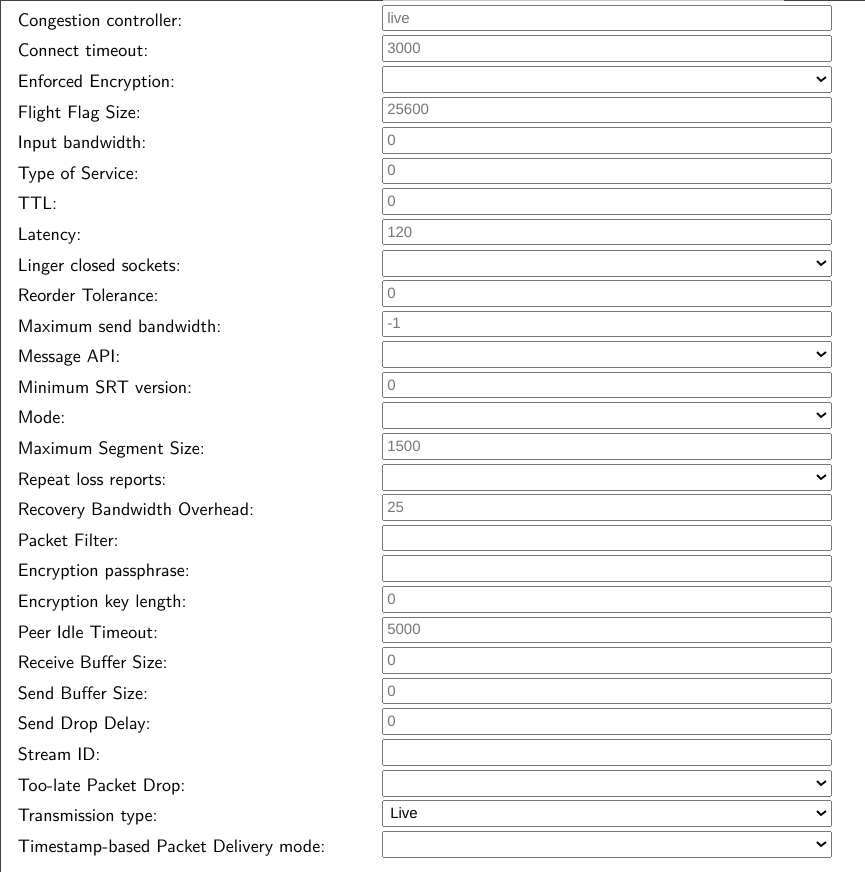

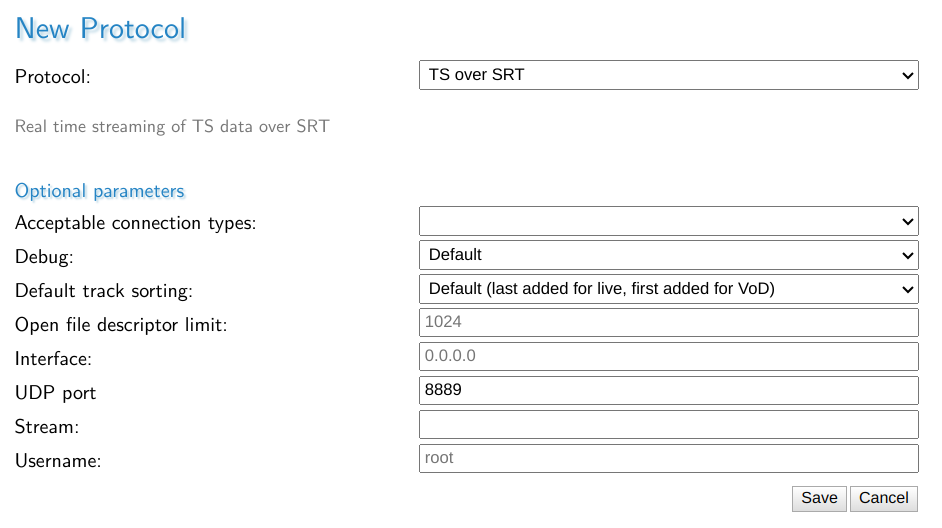

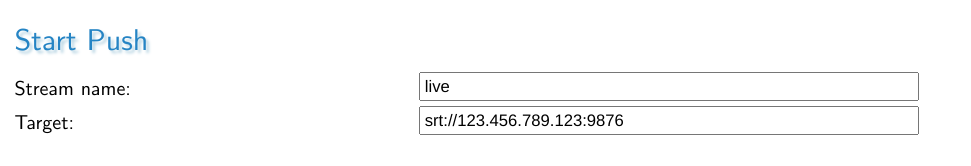

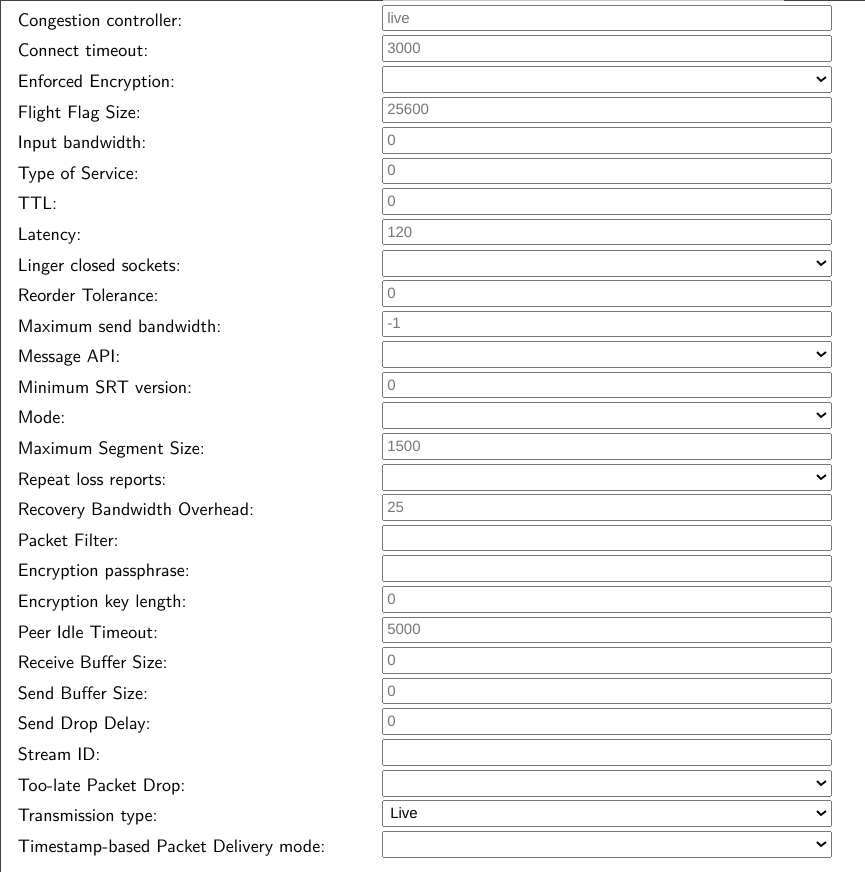

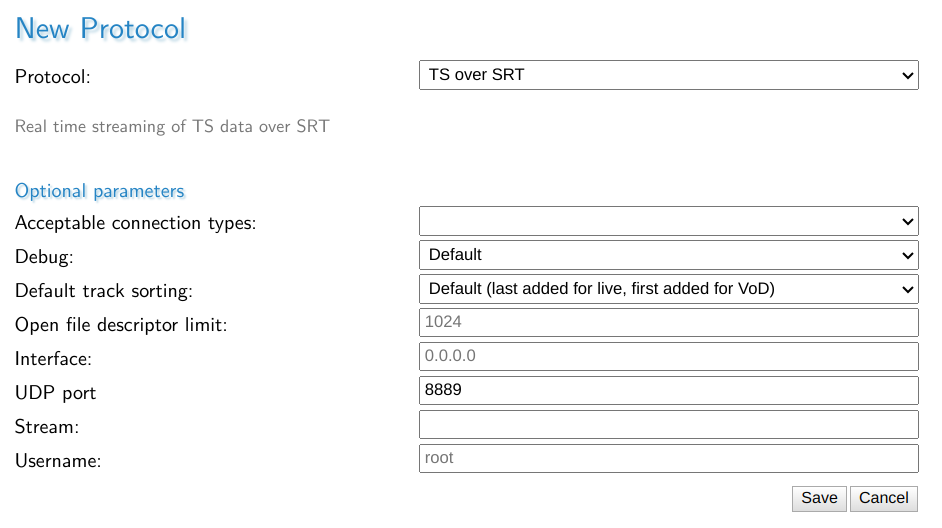

With your source and destination devices set up – including established call modes (listener, caller, rendezvous) and firewall settings – follow these 7 steps to configure an SRT stream:

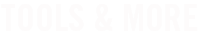

#1. Measure the round-trip time (RTT)

Also called round-trip delay, RTT (measured in milliseconds) is the time required for a packet to travel from a source to a specific destination and back again. RTT is used as a guide when configuring bandwidth overhead and latency.

To determine the RTT between two devices, you can use the ping command or, if ping does not work or is not available, set up a test SRT stream and use the RTT value from the statistics page.

If the RTT is <= 20 ms, then use 20 ms for the RTT value. This is because SRT does not respond to events on time scales shorter than 20 ms.

#2. Calculate the packet loss rate

Packet loss rate is a measure of network congestion, expressed as a percentage of packets lost with respect to packets sent. A channel’s packet loss rate drives the SRT latency and bandwidth overhead calculations and can be extracted from iperf statistics.

If using iperf is not possible, set up a test SRT stream, and then use the resent bytes and sent bytes reported on the SRT stream’s statistics page over a 60-second period to calculate the packet loss rate as follows:

Packet loss rate = resent bytes ÷ sent bytes * 100
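The formula above is simple enough to script when reading counters from a test stream. This is a minimal sketch; `packet_loss_rate` is a hypothetical helper, and the byte counts in the example are illustrative, not real measurements.

```python
def packet_loss_rate(resent_bytes: int, sent_bytes: int) -> float:
    """Return the packet loss rate in percent: resent bytes / sent bytes * 100."""
    if sent_bytes <= 0:
        raise ValueError("sent_bytes must be positive")
    if resent_bytes < 0:
        raise ValueError("resent_bytes must not be negative")
    return resent_bytes / sent_bytes * 100


# Illustrative counters from a 60-second test stream (not real measurements).
rate = packet_loss_rate(resent_bytes=1_200_000, sent_bytes=60_000_000)
print(f"Packet loss rate: {rate:.1f}%")  # Packet loss rate: 2.0%
```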

#3. Calculate the RTT multiplier and bandwidth overhead values

The RTT multiplier is a value used in the calculation of SRT latency. It reflects the relationship between the degree of congestion on a network and the RTT. The bandwidth overhead is the portion of the total bandwidth of a stream that is required for the exchange of SRT control and recovered packets.

Note that the RTT multiplier ranges from 3 to 20. Anything below 3 is too small for SRT to be effective, and anything above 20 implies a network with 100% packet loss.

Find the RTT multiplier and bandwidth overhead values that correspond to your measured packet loss rate using the table below:

#4. Calculate SRT Latency

Determine your SRT latency value using the following formula:

SRT latency = RTT multiplier * RTT

If RTT < 20 ms, use the minimum SRT latency value in the table above.
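Steps 1, 3, and 4 combine into one small calculation. The sketch below applies step 1's rule (treat any RTT below 20 ms as 20 ms) and rejects multipliers outside the 3 to 20 range from step 3. The article also points to a minimum latency column in its table for RTT < 20 ms; where that table value differs, prefer it. The function name and the multiplier of 4 in the example are hypothetical, not values read from the table.

```python
MIN_RTT_MS = 20          # step 1: SRT does not react to events shorter than 20 ms
MIN_RTT_MULTIPLIER = 3   # step 3: below 3 is too small for SRT to be effective
MAX_RTT_MULTIPLIER = 20  # step 3: above 20 implies 100% packet loss


def srt_latency_ms(measured_rtt_ms: float, rtt_multiplier: float) -> float:
    """SRT latency = RTT multiplier * RTT, using at least 20 ms for the RTT."""
    if not MIN_RTT_MULTIPLIER <= rtt_multiplier <= MAX_RTT_MULTIPLIER:
        raise ValueError("RTT multiplier must be between 3 and 20")
    effective_rtt_ms = max(measured_rtt_ms, MIN_RTT_MS)
    return rtt_multiplier * effective_rtt_ms


print(srt_latency_ms(85, 4))  # 340
print(srt_latency_ms(8, 4))   # 80, because the 8 ms RTT is raised to 20 ms
```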

#5. Measure the nominal channel capacity

Using the iperf utility, measure the nominal channel capacity available to the SRT stream.

If iperf does not work or is not available, set up a test SRT stream and use the max bandwidth or path max bandwidth value from the statistics page.

#6. Determine the stream bitrate

The stream bitrate is the sum of the video, audio, and metadata essence bitrates, plus an SRT protocol overhead. It must satisfy the following constraint:

Channel capacity > SRT stream bandwidth * (100 + bandwidth overhead) ÷ 100

If this is not respected, then the video/audio/metadata bitrate must be reduced until it is respected. It’s recommended that a significant amount of headroom be added to cushion against varying channel capacity, so a more conservative constraint would be:

0.75 * channel capacity > SRT stream bandwidth * (100 + bandwidth overhead) ÷ 100
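Both constraints can be checked, and the largest usable stream bitrate derived, with a few lines of arithmetic. This is a sketch with hypothetical helper names; any consistent unit works (kbps in the example), and the 25% bandwidth overhead is an assumed example value, not one taken from the table.

```python
def srt_bandwidth(stream_bitrate: float, overhead_pct: float) -> float:
    """Bandwidth used by the SRT stream once the bandwidth overhead is added."""
    return stream_bitrate * (100 + overhead_pct) / 100


def fits_channel(stream_bitrate: float, overhead_pct: float,
                 channel_capacity: float, headroom: float = 0.75) -> bool:
    """Check headroom * capacity > stream * (100 + overhead) / 100.

    headroom=1.0 is the minimum constraint; 0.75 is the conservative one.
    """
    return headroom * channel_capacity > srt_bandwidth(stream_bitrate, overhead_pct)


def max_stream_bitrate(overhead_pct: float, channel_capacity: float,
                       headroom: float = 0.75) -> float:
    """Bitrate at which the constraint becomes an equality; stay below it."""
    return headroom * channel_capacity * 100 / (100 + overhead_pct)


# Example in kbps: a 10,000 kbps channel with an assumed 25% bandwidth overhead.
print(max_stream_bitrate(25, 10_000))                 # 6000.0
print(fits_channel(5_000, 25, 10_000))                # True
print(fits_channel(7_000, 25, 10_000))                # False
print(fits_channel(7_000, 25, 10_000, headroom=1.0))  # True
```

Because the inequality is strict, `max_stream_bitrate` is a ceiling to stay under, not a target bitrate.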

#7. Verify that the SRT stream has been set up correctly

The best way to verify this is to set up a test SRT stream and look at the SRT send buffer graph on the statistics page of the source device. The send buffer value should never exceed the SRT latency bound. If the two plot lines are close, increase the SRT latency.
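If you export send buffer samples from the statistics page, the visual check in step 7 can be approximated in code. This is a sketch under assumptions: the samples are in milliseconds, and "close" is modeled with a hypothetical `margin` of 80% of the latency, a threshold the article does not specify.

```python
from typing import Iterable


def check_send_buffer(send_buffer_ms: Iterable[float], latency_ms: float,
                      margin: float = 0.8) -> str:
    """Classify send buffer samples against the configured SRT latency.

    Returns "exceeds" if any sample is above the latency, "close" if the peak
    reaches margin * latency (hypothetical threshold), otherwise "ok".
    """
    peak = max(send_buffer_ms)
    if peak > latency_ms:
        return "exceeds"
    if peak >= margin * latency_ms:
        return "close"
    return "ok"


print(check_send_buffer([40, 55, 60], latency_ms=200))     # ok
print(check_send_buffer([120, 170, 180], latency_ms=200))  # close
```

Either "close" or "exceeds" means the SRT latency should be increased and the test repeated.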

SRT Protocol Technical Overview

For a deeper dive into configuring and tuning SRT for your use case, download the SRT Protocol Technical Overview.


Lina Nikols

Lina Nikols is a seasoned content specialist with almost 20 years of combined copywriting and marketing experience. Well versed in writing about a broad range of subjects, from consumer technology to enterprise software solutions, Lina is passionate about creating clear, no-fuss content that zeroes in on the wants and needs of her readers. When she’s not writing or researching, she loves running (very slowly), photography and tea drinking.


The SRT Protocol

This document specifies the Secure Reliable Transport (SRT) protocol. SRT is a user-level protocol over the User Datagram Protocol (UDP) that provides reliability and security optimized for low latency live video streaming, as well as generic bulk data transfer. To achieve this, SRT introduces control packet extensions, improved flow control, enhanced congestion control, and a mechanism for data encryption.

Note to Readers

Source for this draft and an issue tracker can be found at https://github.com/haivision/srt-rfc.

Status of This Memo

This Internet-Draft is submitted in full conformance with the provisions of BCP 78 and BCP 79.

Internet-Drafts are working documents of the Internet Engineering Task Force (IETF). Note that other groups may also distribute working documents as Internet-Drafts. The list of current Internet-Drafts is at https://datatracker.ietf.org/drafts/current/.

Internet-Drafts are draft documents valid for a maximum of six months and may be updated, replaced, or obsoleted by other documents at any time. It is inappropriate to use Internet-Drafts as reference material or to cite them other than as "work in progress."

This Internet-Draft will expire on 11 July 2024.

Copyright Notice

Copyright (c) 2024 IETF Trust and the persons identified as the document authors. All rights reserved.

This document is subject to BCP 78 and the IETF Trust's Legal Provisions Relating to IETF Documents (https://trustee.ietf.org/license-info) in effect on the date of publication of this document. Please review these documents carefully, as they describe your rights and restrictions with respect to this document.

1. Introduction

1.1. Motivation

The demand for live video streaming has been increasing steadily for many years. With the emergence of cloud technologies, many video processing pipeline components have transitioned from on-premises appliances to software running on cloud instances. While real-time streaming over TCP-based protocols like RTMP [RTMP] is possible at low bitrates and on a small scale, the exponential growth of the streaming market has created a need for more powerful solutions.

To improve scalability on the delivery side, content delivery networks (CDNs) at one point transitioned to segmentation-based technologies like HLS (HTTP Live Streaming) [RFC8216] and DASH (Dynamic Adaptive Streaming over HTTP) [ISO23009]. This move increased the end-to-end latency of live streaming to several tens of seconds, which makes it unattractive for use cases where real-time delivery is important. Over time, the industry optimized these delivery methods, bringing the latency down to a few seconds.

While the delivery side scaled up, improvements to video transcoding became a necessity. Viewers watch video streams on a variety of devices, connected over different types of networks. Since upload bandwidth from on-premises locations is often limited, video transcoding moved to the cloud.

RTMP became the de facto standard for contribution over the public Internet. However, it limits the payload that can be transmitted: as a media-specific protocol, RTMP only supports two audio channels and a restricted set of audio and video codecs, lacking support for newer formats such as HEVC [H.265], VP9 [VP9], or AV1 [AV1].

Since RTMP, HLS and DASH rely on TCP, these protocols can only guarantee acceptable reliability over connections with low RTTs, and cannot use the full bandwidth of network connections due to limitations imposed by congestion control. Notably, QUIC [RFC9000] has been designed to address these problems with HTTP-based delivery protocols in HTTP/3 [RFC9114]. Like QUIC, SRT [SRTSRC] uses UDP instead of TCP as its transport protocol, but assures more reliable delivery using Automatic Repeat Request (ARQ), packet acknowledgments, end-to-end latency management, etc.

1.2. Secure Reliable Transport Protocol

Low latency video transmissions across reliable (usually local) IP-based networks typically take the form of MPEG-TS [ISO13818-1] unicast or multicast streams using the UDP/RTP protocol, where any packet loss can be mitigated by enabling forward error correction (FEC). Achieving the same low latency between sites in different cities, countries, or even continents is more challenging. While it is possible with satellite links or dedicated MPLS [RFC3031] networks, these are expensive solutions. The use of public Internet connectivity, while less expensive, imposes significant bandwidth overhead to achieve the necessary level of packet loss recovery. Introducing selective packet retransmission (reliable UDP) to recover from packet loss removes those limitations.

Derived from the UDP-based Data Transfer (UDT) protocol [GHG04b], SRT is a user-level protocol that retains most of UDT's core concepts and mechanisms while introducing several refinements and enhancements, including control packet modifications, improved flow control for handling live streaming, enhanced congestion control, and a mechanism for encrypting packets.

SRT is a transport protocol that enables the secure, reliable transport of data across unpredictable networks, such as the Internet. While any data type can be transferred via SRT, it is ideal for low latency (sub-second) video streaming. SRT provides improved bandwidth utilization compared to RTMP, allowing much higher contribution bitrates over long-distance connections.

As packets are streamed from source to destination, SRT detects and adapts to the real-time network conditions between the two endpoints, and helps compensate for jitter and bandwidth fluctuations due to congestion over noisy networks. Its error recovery mechanism minimizes the packet loss typical of Internet connections.

To achieve low latency streaming, SRT had to address timing issues. The characteristics of a stream from a source network are completely changed by transmission over the public Internet, which introduces delays, jitter, and packet loss. This, in turn, leads to problems with decoding, as the audio and video decoders do not receive packets at the expected times. The use of large buffers helps, but increases latency. SRT includes a mechanism to keep a constant end-to-end latency, thus recreating the signal characteristics on the receiver side and reducing the need for buffering.

Like TCP, SRT employs a listener/caller model. The data flow is bi-directional and independent of the connection initiation: either the sender or the receiver can operate as listener or caller to initiate a connection. The protocol provides an internal multiplexing mechanism that allows multiple SRT connections to share the same UDP port, and provides access control functionality to identify the caller on the listener side.

Supporting both forward error correction (FEC) and selective packet retransmission (ARQ), SRT provides the flexibility to use either of the two mechanisms or both combined, allowing for use cases ranging from the lowest possible latency to the highest possible reliability.

SRT retains UDT's ability to perform fast file transfers and adds support for AES encryption.

2. Terms and Definitions

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "NOT RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in BCP 14 [RFC2119] [RFC8174] when, and only when, they appear in all capitals, as shown here.

SRT: The Secure Reliable Transport protocol described by this document.

PRNG: Pseudo-Random Number Generator.

3. Packet Structure

SRT packets are transmitted as UDP payload [RFC0768]. Every UDP packet carrying SRT traffic contains an SRT header immediately after the UDP header (Figure 1).

SRT has two types of packets distinguished by the Packet Type Flag: data packet and control packet.

The structure of the SRT packet is shown in Figure 2.

Packet Type Flag. The control packet has this flag set to "1". The data packet has this flag set to "0".

The timestamp of the packet, in microseconds. The value is relative to the time the SRT connection was established. Depending on the transmission mode (Section 4.2), the field stores the packet send time or the packet origin time.

A fixed-width field providing the SRT socket ID to which a packet should be dispatched. The field may have the special value "0" when the packet is a connection request.

3.1. Data Packets

The structure of the SRT data packet is shown in Figure 3.

The sequential number of the data packet. Range [0; 2^31 - 1].

Packet Position Flag. This field indicates the position of the data packet in the message. The value "10b" (binary) means the first packet of the message. "00b" indicates a packet in the middle. "01b" designates the last packet. If a single data packet forms the whole message, the value is "11b".

Order Flag. Indicates whether the message should be delivered by the receiver in order (1) or not (0). Certain restrictions apply depending on the data transmission mode used (Section 4.2).

Key-based Encryption Flag. The flag bits indicate whether or not data is encrypted. The value "00b" (binary) means data is not encrypted. "01b" indicates that data is encrypted with an even key, and "10b" is used for odd key encryption. Refer to Section 6. The value "11b" is only used in control packets.

Retransmitted Packet Flag. This flag is clear when a packet is transmitted the first time. The flag is set to "1" when a packet is retransmitted.

The sequential number of consecutive data packets that form a message (see PP field).

See Section 3.

The payload of the data packet. The length of the data is the remaining length of the UDP packet.

The message authentication tag (AES-GCM). The field is only present if AES-GCM crypto mode has been negotiated.
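The data packet fields above can be tied together with a small decoder. This is a sketch, not a reference implementation: it assumes the 16-byte header layout of Figure 3 (not reproduced in this text) in network byte order, with the F bit and 31-bit sequence number in the first word, and PP, O, KK, R, and a 26-bit Message Number in the second. `parse_data_header` and `DataPacketHeader` are hypothetical names.

```python
import struct
from dataclasses import dataclass


@dataclass
class DataPacketHeader:
    seq_no: int          # Packet Sequence Number, 31 bits
    pp: int              # Packet Position: 0b10 first, 0b00 middle, 0b01 last, 0b11 single
    in_order: bool       # O flag
    kk: int              # 0b00 clear, 0b01 even key, 0b10 odd key
    retransmitted: bool  # R flag
    msg_no: int          # Message Number (assumed 26 bits)
    timestamp_us: int    # microseconds since the connection was established
    dst_socket_id: int   # Destination SRT Socket ID


def parse_data_header(packet: bytes) -> DataPacketHeader:
    """Decode the 16-byte SRT header of a data packet (network byte order)."""
    if len(packet) < 16:
        raise ValueError("an SRT header is 16 bytes long")
    word0, word1, timestamp, socket_id = struct.unpack("!IIII", packet[:16])
    if word0 >> 31:  # Packet Type Flag
        raise ValueError("F bit is 1: this is a control packet")
    return DataPacketHeader(
        seq_no=word0 & 0x7FFF_FFFF,
        pp=(word1 >> 30) & 0b11,
        in_order=bool((word1 >> 29) & 1),
        kk=(word1 >> 27) & 0b11,
        retransmitted=bool((word1 >> 26) & 1),
        msg_no=word1 & 0x03FF_FFFF,
        timestamp_us=timestamp,
        dst_socket_id=socket_id,
    )


# A hand-built header: single-packet message, even key, retransmitted.
word1 = (0b11 << 30) | (0b01 << 27) | (1 << 26) | 5
raw = struct.pack("!IIII", 1000, word1, 123_456, 0x1234) + b"payload"
print(parse_data_header(raw))
```

Everything after the first 16 bytes (`raw[16:]`) is the payload, plus the authentication tag when AES-GCM is in use.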

3.2. Control Packets

An SRT control packet has the following structure.

Control Packet Type. The use of these bits is determined by the control packet type definition. See Table 1.

This field specifies an additional subtype for specific packets. See Table 1.

The use of this field depends on the particular control packet type. Handshake packets do not use this field.

The use of this field is defined by the Control Type field of the control packet.

The types of SRT control packets are shown in Table 1. The value "0x7FFF" is reserved for a user-defined type.
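A control packet header can be split the same way as a data header. This sketch assumes the header occupies 16 bytes in network byte order, with the F bit followed by a 15-bit Control Type (consistent with the 0x7FFF maximum) and a 16-bit Subtype in the first word. The name table only lists Control Type values stated in this text (handshake 0x0000, keep-alive 1, ACK 2, NAK 3, user-defined 0x7FFF); Table 1 has the full list. `parse_control_header` is a hypothetical helper.

```python
import struct

# Control Type values stated in this text; the full list is in Table 1.
CONTROL_TYPE_NAMES = {
    0x0000: "HANDSHAKE",
    0x0001: "KEEPALIVE",
    0x0002: "ACK",
    0x0003: "NAK",
    0x7FFF: "USER_DEFINED",
}


def parse_control_header(packet: bytes) -> dict:
    """Split an SRT control packet into header fields and the raw CIF."""
    if len(packet) < 16:
        raise ValueError("an SRT header is 16 bytes long")
    word0, type_specific, timestamp, socket_id = struct.unpack("!IIII", packet[:16])
    if not word0 >> 31:
        raise ValueError("F bit is 0: this is a data packet")
    control_type = (word0 >> 16) & 0x7FFF  # 15 bits after the F flag
    return {
        "control_type": control_type,
        "name": CONTROL_TYPE_NAMES.get(control_type, "UNKNOWN"),
        "subtype": word0 & 0xFFFF,
        "type_specific_info": type_specific,
        "timestamp_us": timestamp,
        "dst_socket_id": socket_id,
        "cif": packet[16:],
    }


ack = struct.pack("!IIII", (1 << 31) | (0x0002 << 16), 7, 5_000, 42) + bytes(28)
hdr = parse_control_header(ack)
print(hdr["name"], hdr["type_specific_info"], len(hdr["cif"]))  # ACK 7 28
```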

3.2.1. Handshake

Handshake control packets (Control Type = 0x0000) are used to exchange peer configurations, to agree on connection parameters, and to establish a connection.

The Type-specific Information field is unused in the case of the HS message. The Control Information Field (CIF) of a handshake control packet is shown in Figure 5.

The handshake version number. Currently used values are 4 and 5. Values greater than 5 are reserved for future use.

Block cipher family and key size. The values of this field are described in Table 2. The default value is 0 (no encryption advertised). If neither peer advertises encryption, AES-128 is selected by default (see Section 4.3).

This field is a message-specific extension related to the Handshake Type field. The value MUST be set to 0 except for the following cases.

(1) If the handshake control packet is the INDUCTION message, this field is sent back by the Listener. (2) In the case of a CONCLUSION message, this field value should contain a combination of Extension Type values.

For more details, see Section 4.3.1.

The sequence number of the very first data packet to be sent.

Maximum Transmission Unit (MTU) size, in bytes. This value is typically set to 1500 bytes, which is the default MTU size for Ethernet, but can be less.

The value of this field is the maximum number of data packets allowed to be "in flight" (i.e., the number of sent packets for which an ACK control packet has not yet been received).

This field indicates the handshake packet type. The possible values are described in Table 4. For more details, refer to Section 4.3.

This field holds the ID of the source SRT socket from which a handshake packet is issued.

Randomized value for processing a handshake. The value of this field is specified by the handshake message type. See Section 4.3.

IPv4 or IPv6 address of the packet's sender. The value consists of four 32-bit fields. In the case of IPv4 addresses, fields 2, 3 and 4 are filled with zeroes.

The value of this field is used to process an integrated handshake. Each extension can have a pair of request and response types.

The length of the Extension Contents field in four-byte blocks.

The payload of the extension.

3.2.1.1. Handshake Extension Message

In a Handshake Extension, the value of the Extension Field of the handshake control packet is defined as 1 for a Handshake Extension request (SRT_CMD_HSREQ in Table 5), and 2 for a Handshake Extension response (SRT_CMD_HSRSP in Table 5).

The Extension Contents field of a Handshake Extension Message is structured as follows:

SRT library version MUST be formed as major * 0x10000 + minor * 0x100 + patch.
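The version formula packs major, minor, and patch into one integer. The sketch below encodes and decodes it; the range checks (minor and patch in one byte, major in two) are an assumption that keeps the encoding unambiguous, and both function names are hypothetical.

```python
def encode_srt_version(major: int, minor: int, patch: int) -> int:
    """SRT Version field: major * 0x10000 + minor * 0x100 + patch."""
    if not (0 <= major <= 0xFFFF and 0 <= minor <= 0xFF and 0 <= patch <= 0xFF):
        raise ValueError("minor and patch must fit in one byte, major in two")
    return major * 0x10000 + minor * 0x100 + patch


def decode_srt_version(value: int) -> tuple:
    """Inverse of encode_srt_version: returns (major, minor, patch)."""
    return value >> 16, (value >> 8) & 0xFF, value & 0xFF


v = encode_srt_version(1, 5, 3)
print(hex(v), decode_srt_version(v))  # 0x10503 (1, 5, 3)
```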

SRT configuration flags (see Section 3.2.1.1.1).

Timestamp-Based Packet Delivery (TSBPD) Delay of the receiver, in milliseconds. Refer to Section 4.5.

TSBPD Delay of the sender, in milliseconds. Refer to Section 4.5.

3.2.1.1.1. Handshake Extension Message Flags

TSBPDSND flag defines whether the TSBPD mechanism (Section 4.5) will be used for sending.

TSBPDRCV flag defines whether the TSBPD mechanism (Section 4.5) will be used for receiving.

CRYPT flag MUST be set. It is a legacy flag that indicates the party understands the KK field of the SRT Packet (Figure 3).

TLPKTDROP flag should be set if the too-late packet drop mechanism will be used during transmission. See Section 4.6.

PERIODICNAK flag set indicates the peer will send periodic NAK packets. See Section 4.8.2.

REXMITFLG flag MUST be set. It is a legacy flag that indicates the peer understands the R field of the SRT DATA Packet (Figure 3).

STREAM flag identifies the transmission mode (Section 4.2) to be used in the connection. If the flag is set, the buffer mode (Section 4.2.2) is used. Otherwise, the message mode (Section 4.2.1) is used.

PACKET_FILTER flag indicates whether the peer supports packet filtering.
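The flags above map naturally onto a bit field. This sketch assumes the bit positions follow the order in which the flags are listed (bit 0 = TSBPDSND through bit 7 = PACKET_FILTER), as laid out in the draft's flags figure; verify against that figure before relying on it. `SrtFlags` and `describe` are hypothetical names.

```python
from enum import IntFlag


class SrtFlags(IntFlag):
    """SRT Flags bits; positions assumed to follow the order listed above."""
    TSBPDSND = 1 << 0
    TSBPDRCV = 1 << 1
    CRYPT = 1 << 2          # legacy, MUST be set
    TLPKTDROP = 1 << 3
    PERIODICNAK = 1 << 4
    REXMITFLG = 1 << 5      # legacy, MUST be set
    STREAM = 1 << 6         # set: buffer mode, clear: message mode
    PACKET_FILTER = 1 << 7


REQUIRED = SrtFlags.CRYPT | SrtFlags.REXMITFLG


def describe(flags: SrtFlags) -> str:
    """Validate the mandatory legacy flags and report the transmission mode."""
    if (flags & REQUIRED) != REQUIRED:
        raise ValueError("CRYPT and REXMITFLG MUST be set")
    return "buffer mode" if flags & SrtFlags.STREAM else "message mode"


live = (SrtFlags.TSBPDSND | SrtFlags.TSBPDRCV | SrtFlags.CRYPT
        | SrtFlags.TLPKTDROP | SrtFlags.PERIODICNAK | SrtFlags.REXMITFLG)
print(hex(live), describe(live))  # 0x3f message mode
```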

3.2.1.2. Key Material Extension Message

If an encrypted connection is being established, the Key Material (KM) is first transmitted as a Handshake Extension message. This extension is not supplied for unprotected connections. The purpose of the extension is to let peers exchange and negotiate encryption-related information to be used to encrypt and decrypt the payload of the stream.

The extension can be supplied with the Handshake Extension Type field set to either SRT_CMD_KMREQ or SRT_CMD_KMRSP (see Table 5 in Section 3.2.1). For more details, refer to Section 4.3.

The KM message is placed in the Extension Contents. See Section 3.2.2 for the structure of the KM message.

In the case of SRT_CMD_KMRSP, the Extension Length value can be equal to 1 (meaning 4 bytes), which indicates an encryption failure. In this case, the Extension Contents field has a different format (Figure 7).

Key Material State of the peer:

NOTE: In the descriptions below, "peer" refers to the remote SRT side sending the KM response, and "agent" refers to the local side interpreting the KM response.

0: unsecured (the peer will encrypt the payload, while the agent has not declared any encryption),

3: no secret (the peer does not have the key to decrypt the incoming payload),

4: bad secret (the peer has the wrong key and can't decrypt the incoming payload),

5: bad crypto mode (the peer expects to use a different cryptographic mode). Since protocol v1.6.

3.2.1.3. Stream ID Extension Message

The Stream ID handshake extension message can be used to identify the stream content. The Stream ID value can be free-form, but there is also a recommended convention that can be used to achieve interoperability.

The Stream ID handshake extension message has the SRT_CMD_SID extension type (see Table 5).

The Extension Contents field holds a sequence of UTF-8 characters (see Figure 8). The maximum allowed size of the StreamID extension is 512 bytes. The actual size is determined by the Extension Length field (Figure 5), which defines the length in four-byte blocks. If the actual payload is less than the declared length, the remaining bytes are set to zeros.

The content is stored as 32-bit little-endian words.
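The Stream ID rules (UTF-8 text, 512-byte limit, zero padding to whole four-byte blocks, 32-bit little-endian words) can be sketched as an encoder/decoder pair. The byte order is our reading of "stored as 32-bit little-endian words": we assume each four-byte group is interpreted as a little-endian word and then sent in network (big-endian) order, which reverses the bytes inside every group. Check this against a real implementation before relying on it; all names here are hypothetical.

```python
from typing import Tuple

MAX_STREAMID_BYTES = 512


def _swap_words(data: bytes) -> bytes:
    """Reverse the byte order inside every 4-byte word (assumed wire order)."""
    return b"".join(data[i:i + 4][::-1] for i in range(0, len(data), 4))


def encode_stream_id(stream_id: str) -> Tuple[int, bytes]:
    """Return (Extension Length in 4-byte blocks, Extension Contents)."""
    raw = stream_id.encode("utf-8")
    if len(raw) > MAX_STREAMID_BYTES:
        raise ValueError("Stream ID exceeds 512 bytes")
    padded = raw + b"\x00" * (-len(raw) % 4)  # zero-fill to a whole block
    return len(padded) // 4, _swap_words(padded)


def decode_stream_id(contents: bytes) -> str:
    """Undo the word swap and strip the zero padding."""
    return _swap_words(contents).rstrip(b"\x00").decode("utf-8")


length, contents = encode_stream_id("#!::r=live")
print(length, contents)  # 3 b'::!#il=r\x00\x00ev'
```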

3.2.1.4. Group Membership Extension

The Group Membership handshake extension is reserved for future use and is intended to allow multipath SRT connections.

The identifier of a group whose members include the sender socket that is making a connection. The target socket that is interpreting GroupID SHOULD belong to the corresponding group on the target side. If such a group does not exist, the target socket MAY create it.

Group type, as per SRT_GTYPE_ enumeration:

0: undefined group type,

1: broadcast group type,

2: main/backup group type,

3: balancing group type,

4: multicast group type (reserved for future use).

Special flags, mostly reserved for future use. See Figure 10.

Special value with interpretation depending on the Type field value:

Not used with broadcast group type,

Defines the link priority for main/backup group type,

Not yet defined for any other cases (reserved for future use).

When set, transmission is synchronized on message numbers; otherwise, it is synchronized on sequence numbers.

3.2.2. Key Material

The purpose of the Key Material Message is to let peers exchange encryption-related information to be used to encrypt and decrypt the payload of the stream.

This message can be supplied in two possible ways:

as a Handshake Extension (see Section 3.2.1.2)

in the Control Information Field of the User-Defined control packet (described below).

When the Key Material is transmitted as a control packet, the Control Type field of the SRT packet header is set to User-Defined Type (see Table 1), and the Subtype field of the header is set to SRT_CMD_KMREQ for a key-refresh request and SRT_CMD_KMRSP for a key-refresh response (Table 5). The KM Refresh mechanism is described in Section 6.1.6.

The structure of the Key Material message is illustrated in Figure 11.

This is a fixed-width field that is reserved for future usage.

This is a fixed-width field that indicates the KM message version:

1: Initial KM message format version.

This is a fixed-width field that indicates the Packet Type:

0: Reserved

1: Media Stream Message (MSmsg)

2: Keying Material Message (KMmsg)

7: Reserved to discriminate MPEG-TS packet (0x47=sync byte).

This is a fixed-width field that contains the signature 'HAI' encoded as a PnP Vendor ID [PNPID] (in big-endian order).

This is a fixed-width field reserved for flag extension or other usage.

This is a fixed-width field that indicates which SEKs (odd and/or even) are provided in the extension:

00b: No SEK is provided (invalid extension format);

01b: Even key is provided;

10b: Odd key is provided;

11b: Both even and odd keys are provided.

This is a fixed-width field for specifying the KEK index (big-endian order) that was used to wrap (and optionally authenticate) the SEK(s). The value 0 is used to indicate the default key of the current stream. Other values are reserved for the possible use of a key management system in the future to retrieve a cryptographic context.

0: Default stream associated key (stream/system default)

1..255: Reserved for manually indexed keys.

This is a fixed-width field for specifying encryption cipher and mode:

0: None or KEKI indexed crypto context;

1: AES-ECB (Reserved, not supported);

2: AES-CTR [SP800-38A];

3: AES-CBC (Reserved, not supported);

4: AES-GCM (Galois Counter Mode), starting from v1.6.0.

If AES-GCM is set as the cipher, AES-GCM MUST also be set as the message authentication code algorithm (the Auth field).

This is a fixed-width field for specifying a message authentication code (MAC) algorithm:

1: AES-GCM, starting from v1.6.0.

If AES-GCM is selected as the MAC algorithm, it MUST also be selected as the cipher.
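The rules for the KK, Cipher, and Auth fields can be expressed as a validation pass over a received KM message. This is a sketch: `km_crypto_errors` is a hypothetical helper, and treating an Auth value of 0 as "no MAC" is an assumption, since the text above only defines Auth value 1 (AES-GCM).

```python
CIPHER_AES_CTR = 2
CIPHER_AES_GCM = 4
RESERVED_CIPHERS = {1, 3}  # AES-ECB and AES-CBC: reserved, not supported
AUTH_AES_GCM = 1


def km_crypto_errors(cipher: int, auth: int, kk: int) -> list:
    """Return the rule violations for the Cipher, Auth and KK fields."""
    errors = []
    if kk == 0b00:
        errors.append("KK=00b: no SEK provided (invalid extension format)")
    if cipher in RESERVED_CIPHERS:
        errors.append(f"cipher {cipher} is reserved and not supported")
    # AES-GCM must be selected as both the cipher and the MAC, or as neither.
    if (cipher == CIPHER_AES_GCM) != (auth == AUTH_AES_GCM):
        errors.append("AES-GCM must be set in both Cipher and Auth")
    return errors


print(km_crypto_errors(CIPHER_AES_GCM, AUTH_AES_GCM, 0b11))  # []
print(km_crypto_errors(CIPHER_AES_CTR, AUTH_AES_GCM, 0b01))
```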

This is a fixed-width field for describing the stream encapsulation:

0: Unspecified or KEKI indexed crypto context

1: MPEG-TS/UDP

2: MPEG-TS/SRT.

This is a fixed-width field reserved for future use.

This is a fixed-width field for specifying the salt length SLen in bytes divided by 4. It can be zero if no salt/IV is present. The only valid salt length defined is 128 bits.

This is a fixed-width field for specifying the SEK length in bytes divided by 4. It gives the size of one key, even if two keys are present, and MUST match the key size specified in the Encryption Field of the handshake packet (Table 2).

This is a variable-width field that complements the keying material by specifying a salt key.

This is a variable-width field for specifying the wrapped key(s), where n = (KK + 1)/2 and the size of the wrap field is ((n * KLen) + 8) bytes.

64-bit Integrity Check Vector (AES key wrap integrity). This field is used to detect whether the keys were unwrapped properly. If the KEK in hand is invalid, validation fails and the unwrapped keys are discarded.

This field identifies an odd or even SEK. If only one key is present, the bit set in the KK field tells which SEK is provided. If both keys are present, this field is eSEK (even key) and it is followed by the odd key oSEK. The length of this field, in bits, is calculated as KLen * 8.

This field with the odd key is present only when the message carries the two SEKs (identified by the KK field).
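The length fields and the Wrap size follow directly from KK and the SEK length. This sketch reads n = (KK + 1)/2 as integer division (1 key for 01b or 10b, 2 keys for 11b), uses the single valid 128-bit salt, and assumes the SEK is an AES key of 16, 24, or 32 bytes, since Table 2 is not reproduced here. `km_sizes` is a hypothetical helper.

```python
AES_KEY_BYTES = {16, 24, 32}  # assumed AES-128/192/256 key sizes
SALT_BYTES = 16               # the only valid salt length is 128 bits
ICV_BYTES = 8                 # 64-bit Integrity Check Vector


def km_sizes(kk: int, sek_bytes: int) -> dict:
    """Derive the SLen/4 and KLen/4 fields and the Wrap field size in bytes."""
    if kk not in (0b01, 0b10, 0b11):
        raise ValueError("KK must name at least one SEK")
    if sek_bytes not in AES_KEY_BYTES:
        raise ValueError("SEK length must be 16, 24 or 32 bytes")
    n = (kk + 1) // 2  # 1 for a single key, 2 for even + odd
    return {
        "slen_div4": SALT_BYTES // 4,
        "klen_div4": sek_bytes // 4,
        "wrap_bytes": n * sek_bytes + ICV_BYTES,
    }


print(km_sizes(0b11, 16))  # {'slen_div4': 4, 'klen_div4': 4, 'wrap_bytes': 40}
print(km_sizes(0b10, 32))  # {'slen_div4': 4, 'klen_div4': 8, 'wrap_bytes': 40}
```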

3.2.3. Keep-Alive

Keep-alive control packets are sent after a certain timeout from the last time any packet (Control or Data) was sent. The purpose of this control packet is to notify the peer to keep the connection open when no data exchange is taking place.

The default timeout for a keep-alive packet to be sent is 1 second.

An SRT keep-alive packet is formatted as follows:

The packet type value of a keep-alive control packet is "1".

The control type value of a keep-alive control packet is "1".

This field is reserved for future definition.

Keep-alive control packets do not contain a Control Information Field (CIF).
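A keep-alive is therefore just the bare control header. This sketch assumes the 16-byte header layout (F bit, 15-bit Control Type, 16-bit Subtype, a 32-bit type-specific field, a 32-bit timestamp, and a 32-bit destination socket ID) in network byte order, with the Subtype and reserved field set to zero. `build_keepalive` and `keepalive_due` are hypothetical names.

```python
import struct

KEEPALIVE_CONTROL_TYPE = 0x0001
KEEPALIVE_TIMEOUT_S = 1.0  # default timeout stated above


def build_keepalive(timestamp_us: int, dst_socket_id: int) -> bytes:
    """16-byte keep-alive: F=1, Control Type=1, Subtype=0, reserved=0, no CIF."""
    word0 = (1 << 31) | (KEEPALIVE_CONTROL_TYPE << 16)
    return struct.pack("!IIII", word0, 0, timestamp_us & 0xFFFF_FFFF, dst_socket_id)


def keepalive_due(now_s: float, last_sent_s: float,
                  timeout_s: float = KEEPALIVE_TIMEOUT_S) -> bool:
    """True once no packet of any kind has been sent for timeout_s seconds."""
    return now_s - last_sent_s >= timeout_s


pkt = build_keepalive(2_500_000, 0x2A)
print(len(pkt), pkt[:4].hex())  # 16 80010000
```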

3.2.4. ACK (Acknowledgment)

Acknowledgment (ACK) control packets are used to provide the delivery status of data packets. By acknowledging the reception of data packets up to the acknowledged packet sequence number, the receiver notifies the sender that all prior packets were received or, in the case of live streaming (Section 4.2, Section 7.1), that preceding missing packets (if any) were dropped as too late to be delivered (Section 4.6).

ACK packets may also carry additional information from the receiver, such as estimates of RTT, RTT variance, link capacity, and receiving speed. The CIF portion of the ACK control packet is expanded as follows:

The packet type value of an ACK control packet is "1".

The control type value of an ACK control packet is "2".

Acknowledgement Number: This field contains the sequential number of the full acknowledgment packet starting from 1, except in the case of Light ACKs and Small ACKs, where this value is 0 (see below).

Last Acknowledged Packet Sequence Number: This field contains the sequence number of the last data packet being acknowledged plus one. In other words, it is the sequence number of the first unacknowledged packet.

RTT: Round-trip time, in microseconds, estimated by the receiver based on the previous ACK/ACKACK packet pair exchange.

RTT Variance: The variance of the RTT estimate, in microseconds.

Available Buffer Size: Available size of the receiver's buffer, in packets.

Packets Receiving Rate: The rate at which packets are being received, in packets per second.

Estimated Link Capacity: Estimated bandwidth of the link, in packets per second.

Receiving Rate: Estimated receiving rate, in bytes per second.
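The ACK CIF is a sequence of 32-bit fields in the order listed above. A minimal packing sketch, assuming unsigned 32-bit fields in network byte order and using hypothetical Python names for the fields; the Full, Small and Light variants simply keep a prefix of the list, as the following paragraphs describe.

```python
import struct

# ACK CIF fields, in wire order; each is an unsigned 32-bit integer.
ACK_CIF_FIELDS = (
    "last_ack_seq",     # Last Acknowledged Packet Sequence Number
    "rtt_us",           # RTT, microseconds
    "rtt_var_us",       # RTT Variance, microseconds
    "avail_buf_pkts",   # Available Buffer Size, packets
    "pkt_recv_rate",    # Packets Receiving Rate, packets/s
    "link_capacity",    # Estimated Link Capacity, packets/s
    "recv_rate_bytes",  # Receiving Rate, bytes/s
)

# How many leading fields each ACK variant carries.
ACK_FIELD_COUNT = {"full": 7, "small": 4, "light": 1}


def pack_ack_cif(kind: str, values: dict) -> bytes:
    names = ACK_CIF_FIELDS[:ACK_FIELD_COUNT[kind]]
    words = [values[name] & 0xFFFFFFFF for name in names]
    return struct.pack("!" + "I" * len(words), *words)


def unpack_ack_cif(cif: bytes) -> dict:
    # The variant is implied by the CIF length (4, 16 or 28 bytes).
    count = len(cif) // 4
    words = struct.unpack("!" + "I" * count, cif[:4 * count])
    return dict(zip(ACK_CIF_FIELDS, words))
```

A Full ACK CIF is therefore 28 bytes, a Small ACK CIF 16 bytes and a Light ACK CIF 4 bytes.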

There are several types of ACK packets:

A Full ACK control packet is sent every 10 ms and has all the fields of Figure 14.

A Light ACK control packet includes only the Last Acknowledged Packet Sequence Number field. The Type-specific Information field should be set to 0.

A Small ACK includes the fields up to and including the Available Buffer Size field. The Type-specific Information field should be set to 0.

The sender only acknowledges the receipt of Full ACK packets (see Section 3.2.8).

The Light ACK and Small ACK packets are used in cases when the receiver should acknowledge received data packets more often than every 10 ms. This is usually needed at high data rates. It is up to the receiver to decide the condition and the type of ACK packet to send (Light or Small). The recommendation is to send a Light ACK for every 64 packets received.
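One way a receiver might schedule ACKs under these rules, sketched with a hypothetical `AckScheduler` class: a Full ACK once 10 ms have elapsed since the previous one, and otherwise a Light ACK after every 64 received packets. For simplicity the timer is only checked when a data packet arrives; a real receiver would also drive it from a periodic timer, and the choice between Light and Small ACKs remains up to the implementation.

```python
FULL_ACK_INTERVAL_US = 10_000   # Full ACK period: 10 ms
LIGHT_ACK_EVERY = 64            # recommended Light ACK cadence, in packets


class AckScheduler:
    def __init__(self, now_us: int) -> None:
        self.last_full_ack_us = now_us
        self.pkts_since_ack = 0
        self.ack_number = 0          # Full ACKs are numbered from 1

    def on_data_packet(self, now_us: int):
        """Return ("full", n), ("light", 0) or None for one received data packet."""
        self.pkts_since_ack += 1
        if now_us - self.last_full_ack_us >= FULL_ACK_INTERVAL_US:
            self.last_full_ack_us = now_us
            self.pkts_since_ack = 0
            self.ack_number += 1
            return ("full", self.ack_number)
        if self.pkts_since_ack >= LIGHT_ACK_EVERY:
            self.pkts_since_ack = 0
            # Light (and Small) ACKs carry 0 in the Acknowledgement Number.
            return ("light", 0)
        return None
```

Timestamps are integer microseconds to avoid floating-point comparisons against the 10 ms boundary.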

3.2.5. NAK (Negative Acknowledgement or Loss Report)

Negative acknowledgment (NAK) control packets are used to signal failed data packet deliveries. The receiver notifies the sender about lost data packets by sending a NAK packet that contains a list of sequence numbers for those lost packets.

An SRT NAK packet is formatted as follows:

The packet type value of a NAK control packet is "1".

The control type value of a NAK control packet is "3".

A single value or a range of lost packet sequence numbers. See packet sequence number coding in Appendix A.
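A minimal sketch of the loss-list coding referenced above, assuming the Appendix A convention that a set most significant bit marks the first number of a range and the next 32-bit value is the end of that range. Sequence-number wraparound is ignored for brevity and the function names are illustrative. This encoder emits a range for any run of two or more consecutive numbers; the decoder accepts single values and ranges alike.

```python
from typing import List

RANGE_FLAG = 0x80000000   # MSB set: first number of a range
SEQ_MASK = 0x7FFFFFFF     # 31-bit packet sequence number


def encode_loss_list(lost: List[int]) -> List[int]:
    """Compress sorted, distinct lost sequence numbers into NAK words."""
    words: List[int] = []
    i = 0
    while i < len(lost):
        j = i
        while j + 1 < len(lost) and lost[j + 1] == lost[j] + 1:
            j += 1
        if j == i:
            words.append(lost[i])                  # single value, MSB clear
        else:
            words.append(lost[i] | RANGE_FLAG)     # range start, MSB set
            words.append(lost[j])                  # range end, MSB clear
        i = j + 1
    return words


def decode_loss_list(words: List[int]) -> List[int]:
    lost: List[int] = []
    it = iter(words)
    for word in it:
        if word & RANGE_FLAG:
            end = next(it)                         # consume the range end
            lost.extend(range(word & SEQ_MASK, end + 1))
        else:
            lost.append(word)
    return lost
```

For instance, losses 5, 7, 8, 9 and 12 are reported as four words: 5, 7 with the MSB set, 9, and 12.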

3.2.6. Congestion Warning

The Congestion Warning control packet is reserved for future use. Its purpose is to allow a receiver to signal a sender that there is congestion happening at the receiving side. The expected behaviour is that upon receiving this packet the sender slows down its sending rate by increasing the minimum inter-packet sending interval by a discrete value (posited to be 12.5%).

Note that the conditions for a receiver to issue this type of packet are not yet defined.

The packet type value of a Congestion Warning control packet is "1".

The control type value of a Congestion Warning control packet is "4".

3.2.7. Shutdown

Shutdown control packets are used to initiate the closing of an SRT connection.

An SRT shutdown control packet is formatted as follows:

The packet type value of a shutdown control packet is "1".

The control type value of a shutdown control packet is "5".

Shutdown control packets do not contain a Control Information Field (CIF).

3.2.8. ACKACK (Acknowledgement of Acknowledgement)

Acknowledgement of Acknowledgement (ACKACK) control packets are sent to acknowledge the reception of a Full ACK and are used by the SRT receiver to calculate the round-trip time.

An SRT ACKACK control packet is formatted as follows:

The packet type value of an ACKACK control packet is "1".

The control type value of an ACKACK control packet is "6".

Acknowledgement Number: This field contains the Acknowledgement Number of the Full ACK packet whose reception is being acknowledged by this ACKACK packet.

ACKACK control packets do not contain a Control Information Field (CIF).
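To show how a Full ACK and its ACKACK yield an RTT sample, here is a sketch of receiver-side bookkeeping: the receiver remembers when each numbered Full ACK was sent and measures the delay until the matching ACKACK arrives. The smoothing (RTT = 7/8 RTT + 1/8 sample, variance = 3/4 variance + 1/4 |RTT - sample|) and the initial values of 100 ms and 50 ms are assumptions in the style of RFC 6298, not taken from this section; `RttTracker` is a hypothetical name.

```python
class RttTracker:
    """Receiver-side RTT estimation from Full ACK / ACKACK pairs (sketch)."""

    def __init__(self, initial_rtt_us: int = 100_000,
                 initial_var_us: int = 50_000) -> None:
        self.sent_at = {}               # Acknowledgement Number -> send time (us)
        self.rtt_us = initial_rtt_us    # assumed initial values
        self.rtt_var_us = initial_var_us

    def on_full_ack_sent(self, ack_number: int, now_us: int) -> None:
        self.sent_at[ack_number] = now_us

    def on_ackack(self, ack_number: int, now_us: int):
        sent = self.sent_at.pop(ack_number, None)
        if sent is None:
            return None                 # unknown or duplicate ACKACK: ignore
        sample = now_us - sent
        # Update the variance against the previous smoothed RTT, then the RTT.
        self.rtt_var_us = (3 * self.rtt_var_us + abs(self.rtt_us - sample)) // 4
        self.rtt_us = (7 * self.rtt_us + sample) // 8
        return sample
```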

3.2.9. Message Drop Request

A Message Drop Request control packet is sent by the sender to the receiver when retransmission is requested for an unacknowledged packet (forming the whole or a part of a message) that is no longer present in the sender's buffer. This may happen, for example, when a TTL parameter (passed in the sending function) triggers a timeout for retransmitting one or more lost packets that constitute parts of a message, causing these packets to be removed from the sender's buffer.

The sender notifies the receiver that it must not wait for retransmission of this message. Note that a Message Drop Request control packet is not sent if the Too-Late Packet Drop mechanism (Section 4.6) causes the sender to drop a message, as in this case the receiver is expected to drop it anyway.

A Message Drop Request contains the message number and the corresponding range of packet sequence numbers that form the whole message. If the sender no longer has the specific packet or packets for which retransmission was requested in its buffer, it is unable to restore the message number. In this case the Message Number field must be set to zero, and the receiver should drop packets in the provided packet sequence number range.

The packet type value of a Drop Request control packet is "1".

The control type value of a Drop Request control packet is "7".

Message Number: The identifying number of the message requested to be dropped. See the Message Number field in Section 3.1.

First Packet Sequence Number: The sequence number of the first packet in the message.

Last Packet Sequence Number: The sequence number of the last packet in the message.

3.2.10. Peer Error

The Peer Error control packet is sent by a receiver when a processing error (e.g., a failure to write to disk) occurs. This informs the sender of the situation and unblocks it from waiting for further responses from the receiver.

The sender receiving this type of control packet must unblock any sending operation in progress.

NOTE: This control packet is only used if the File Transfer Congestion Control (Section 5.2) is enabled.

The packet type value of a Peer Error control packet is "1".

The control type value of a Peer Error control packet is "8".

Error Code: Peer error code. At the moment the only value defined is 4000 (file system error).

4. SRT Data Transmission and Control

This section describes key concepts related to the handling of control and data packets during the transmission process.

After the handshake and exchange of capabilities is completed, packet data can be sent and received over the established connection. To fully utilize the features of low latency and error recovery provided by SRT, the sender and receiver must handle control packets, timers, and buffers for the connection as specified in this section.

4.1. Stream Multiplexing

Multiple SRT sockets may share the same UDP socket, so that packets received on this UDP socket are correctly dispatched to the SRT sockets for which they are destined.

During the handshake, the parties exchange their SRT Socket IDs. These IDs are then used in the Destination SRT Socket ID field of every control and data packet (see Section 3).
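The dispatch step can be pictured as a lookup keyed by the Destination SRT Socket ID. The sketch assumes that this field is the fourth 32-bit word of both the data and the control packet header (byte offset 12) and that a value of 0 denotes a connection request, as in the Induction Request (Section 4.3.1.1.1); `UdpMux` and its handler registry are hypothetical.

```python
import struct
from typing import Callable, Dict, Optional

Handler = Callable[[bytes], None]


class UdpMux:
    """Routes datagrams from one UDP socket to SRT sockets by destination ID."""

    def __init__(self, listener: Optional[Handler] = None) -> None:
        self.sockets: Dict[int, Handler] = {}   # SRT Socket ID -> handler
        self.listener = listener                # receives connection requests

    def register(self, socket_id: int, handler: Handler) -> None:
        self.sockets[socket_id] = handler

    def dispatch(self, datagram: bytes) -> bool:
        if len(datagram) < 16:
            return False                        # too short for an SRT header
        (dest_id,) = struct.unpack_from("!I", datagram, 12)
        handler = self.listener if dest_id == 0 else self.sockets.get(dest_id)
        if handler is None:
            return False                        # no matching SRT socket
        handler(datagram)
        return True
```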

4.2. Data Transmission Modes

There are two data transmission modes supported by SRT: message mode (Section 4.2.1) and buffer mode (Section 4.2.2). These are the modes originally defined in the UDT protocol [GHG04b].

As SRT has been mainly designed for live video and audio streaming, its main and default transmission mode is message mode with certain settings applied (Section 7.1).

Besides live streaming, SRT maintains the ability for fast file transfers introduced in UDT (Section 7.2). Both message and buffer modes can be used in this case.

Best practices and configuration tips for both use cases can be found in Section 7.

4.2.1. Message Mode

When the STREAM flag of the handshake Extension Message (Section 3.2.1.1) is set to 0, the protocol operates in message mode, characterized as follows:

Every packet has its own Packet Sequence Number.

One or several consecutive SRT data packets can form a message.

All the packets belonging to the same message have the same message number set in the Message Number field.

The first packet of a message has the first bit of the Packet Position Flags (Section 3.1) set to 1. The last packet of the message has the second bit of the Packet Position Flags set to 1. Thus, a PP equal to "11b" indicates a packet that forms the whole message. A PP equal to "00b" indicates a packet that belongs to the inner part of the message.
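A small sketch of reading the Packet Position Flags and checking whether a run of packets forms exactly one complete message. It assumes the data header layout in which PP occupies the two most significant bits of the second 32-bit word, followed by the Order Flag, with the Message Number in the low 26 bits (see Section 3.1); the helper names are illustrative.

```python
from typing import List, Tuple

PP_FIRST, PP_LAST, PP_SOLO, PP_MIDDLE = 0b10, 0b01, 0b11, 0b00


def parse_message_word(word: int) -> Tuple[int, int, int]:
    """Return (PP, Order Flag, Message Number) from the 2nd header word."""
    pp = (word >> 30) & 0b11
    order = (word >> 29) & 0b1
    msg_no = word & 0x03FFFFFF
    return pp, order, msg_no


def is_complete_message(pps: List[int]) -> bool:
    """True if PP values, in sequence order, span exactly one whole message."""
    if pps == [PP_SOLO]:
        return True
    return (len(pps) >= 2 and pps[0] == PP_FIRST and pps[-1] == PP_LAST
            and all(pp == PP_MIDDLE for pp in pps[1:-1]))
```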

The concept of the message in SRT comes from UDT [GHG04b]. In this mode, a single sending instruction passes exactly one piece of data that has boundaries (a message). This message may span multiple UDP packets and multiple SRT data packets. The only size limitation is that it shall fit as a whole in the buffers of the sender and the receiver. Although internally all operations (e.g., ACK, NAK) on data packets are performed independently, an application must send and receive the whole message. Until the message is complete (all packets are received), the application will not be allowed to read it.

When the Order Flag of a data packet is set to 1, this imposes a sequential reading order on messages. An Order Flag set to 0 allows an application to read messages that are already fully available, before any preceding messages that may have some packets missing.

4.2.2. Buffer Mode

Buffer mode is negotiated during the handshake by setting the STREAM flag of the handshake Extension Message Flags (Section 3.2.1.1.1) to 1.

In this mode, consecutive packets form one continuous stream that can be read with portions of any size.

4.3. Handshake Messages

SRT uses UDP as an underlying connectionless transport protocol, but SRT itself is connection-oriented. It embraces the concepts of "connection" and "session". Every SRT session starts with the connection phase, where peers exchange configuration parameters and relevant information by means of SRT handshake control packets.

SRT versions prior to v1.3.0 use version 4 of the handshaking procedure. HS version 5 is used starting from SRT v1.3.0. HS version 4 is not described in this specification. SRT implementations MUST support HS version 5, but MAY not support HS v4.

An SRT connection is characterized by the fact that it is:

first engaged by a handshake process,

maintained as long as any packets are being exchanged in a timely manner, and

considered closed when a party receives the appropriate SHUTDOWN command from its peer (connection closed by the foreign host), or when it receives no packets at all for some predefined time (connection broken on timeout).

SRT supports two connection modes:

Caller-Listener, where one side waits for the other to initiate a connection;

Rendezvous, where both sides attempt to initiate a connection.

The handshake is performed between two parties, "Initiator" and "Responder", in the following order:

Initiator starts an extended SRT handshake process and sends appropriate SRT extended handshake requests.

Responder expects the SRT extended handshake requests to be sent by the Initiator and sends SRT extended handshake responses back.

There are three basic types of SRT handshake extensions that are exchanged in the handshake:

Handshake Extension Message exchanges the basic SRT information;

Key Material Exchange exchanges the wrapped stream encryption key (used only if encryption is requested);

Stream ID extension exchanges some stream-specific information that can be used by the application to identify an incoming stream connection.

The Initiator and Responder roles are assigned depending on the connection mode.

For Caller-Listener connections: the Caller is the Initiator, the Listener is the Responder. For Rendezvous connections: the Initiator and Responder roles are assigned based on the initial data interchange during the handshake.

The Handshake Type field in the Handshake Structure (see Figure 5) indicates the handshake message type.

Caller-Listener handshake exchange has the following order of Handshake Types:

Caller to Listener: INDUCTION Request

Listener to Caller: INDUCTION Response (reports cookie)

Caller to Listener: CONCLUSION Request (uses previously returned cookie)

Listener to Caller: CONCLUSION Response (confirms connection established).

Rendezvous handshake exchange has the following order of Handshake Types:

Both peers after starting the connection: WAVEAHAND with a cookie.

After receiving the above message from the peer: CONCLUSION

After receiving the above message from the peer: AGREEMENT.

When a connection process has failed before either party can send the CONCLUSION handshake, the Handshake Type field will contain the appropriate error value for the rejected connection. See the list of error codes in Table 7.

The specification of the cipher family and block size is decided by the data Sender. When the transmission is bidirectional, this value MUST be agreed upon at the outset because when both are set the Responder wins. For Caller-Listener connections it is reasonable to set this value on the Listener only. In the case of Rendezvous the only reasonable approach is to decide upon the correct value from the different sources and to set it on both parties (note that AES-128 is the default).

4.3.1. Caller-Listener Handshake

This section describes the handshaking process where a Listener is waiting for an incoming Handshake request on a bound UDP port from a Caller. The process has two phases: induction and conclusion.

4.3.1.1. The Induction Phase

The INDUCTION phase serves only to set a cookie on the Listener so that it doesn't allocate resources, thus mitigating a potential DoS attack that might be perpetrated by flooding the Listener with handshake commands.

4.3.1.1.1. The Induction Request

The Caller begins by sending the INDUCTION handshake, which contains the following significant fields:

Destination SRT Socket ID: 0.

HS Version: MUST always be 4.

Encryption Field: 0.

Extension Field: 2

Handshake Type: INDUCTION

SRT Socket ID: SRT Socket ID of the Caller

SYN Cookie: 0.

There MUST be no HS extensions.

The Destination SRT Socket ID of the SRT packet header in this message is 0, which is interpreted as a connection request.

The handshake version number is set to 4 in this initial handshake. This is due to the initial design of SRT, which was intended to be compliant with the UDT protocol [GHG04b] on which it is based.

4.3.1.1.2. The Induction Response

The Listener responds with the following:

HS Version: 5.

Encryption Field: Advertised cipher family and block size.

Extension Field: SRT magic code 0x4A17.

SRT Socket ID: Socket ID of the Listener

SYN Cookie: a cookie that is crafted based on host, port and current time with 1 minute accuracy to avoid SYN flooding attack [RFC4987].

At this point the Listener still does not know if the Caller is SRT or UDT, and it responds with the same set of values regardless of whether the Caller is SRT or UDT.

A legacy UDT party completely ignores the values reported in the HS Version and Handshake Type fields. It is, however, interested in the SYN Cookie value, as this must be passed to the next phase. It does interpret these fields, but only in the "conclusion" message.

4.3.1.2. The Conclusion Phase

4.3.1.2.1. The Conclusion Request

The SRT caller receives the Induction Response from the SRT listener. The SRT caller MUST check the Induction response from the SRT listener.

If the HS Version value is 5, the response came from SRT, and the handshake version 5 procedure is performed as covered below. If the HS Version value is 4, the legacy handshake procedure can be applied if supported. The procedure is deprecated and is not covered here. The caller MAY reject the connection with the SRT_REJ_VERSION reason. In this case there is nothing to send to the SRT listener, as there is no connection established at this point.

The Extension Flags field MUST contain the magic value 0x4A17. If it does not, the connection MUST be rejected with rejection reason SRT_REJ_ROGUE. This is, among other things, a contingency for the case when someone, in an attempt to extend UDT independently, increases the HS Version value to 5 and tries to test it against SRT. In this case there is nothing to send to the SRT listener, as there is no connection established at this point.

If the Encryption Flag field is set to 0 (not advertised), the caller MAY advertise its own cipher and key length. If the induction response already advertises a certain value in the Encryption Flag, the caller MAY accept it or force its own value. It is RECOMMENDED that if a caller will be sending the content, then it SHOULD force its own value. If it expects to receive content from the SRT listener, then it is RECOMMENDED that it accept the value advertised in the Encryption Flag field.

An alternative behavior MAY be for a caller to take the longer key length in such cases.
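The caller-side checks on the Induction Response, together with the RECOMMENDED cipher choice above (not the longer-key alternative), can be summarized as follows. This is a sketch under stated assumptions: rejection reasons are returned as strings rather than numeric codes, the encryption field is treated as an opaque value where 0 means "not advertised", any HS Version other than 5 is rejected, and the function names are hypothetical.

```python
from typing import Optional

SRT_MAGIC = 0x4A17   # required Extension Field value in the Induction Response


def check_induction_response(hs_version: int, extension_field: int) -> Optional[str]:
    """Return None to proceed with HSv5, or a rejection reason."""
    if hs_version != 5:
        # HSv4 (legacy, deprecated) is rejected here; treating any other
        # non-5 value the same way is an assumption of this sketch.
        return "SRT_REJ_VERSION"
    if extension_field != SRT_MAGIC:
        return "SRT_REJ_ROGUE"
    return None


def choose_cipher(advertised: int, own: int, caller_sends: bool) -> int:
    """Encryption field value the caller places in its Conclusion Request."""
    if advertised == 0:
        return own                       # listener advertised nothing
    # Sender forces its own value; a receiving caller accepts the listener's.
    return own if caller_sends else advertised
```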

TODO: Receiver TSBPD Delay, Sender TSBPD Delay.

The SRT Caller forms a Conclusion Request. The following values of a Handshake packet MUST be set by the compliant Caller:

Handshake Type: CONCLUSION.

SRT Socket ID: Socket ID of the Caller.

SYN Cookie: the Listener's cookie from the induction response.

Encryption Flags: advertised cipher family and block size.

Extension Flags: a set of flags that define the extensions provided in the handshake.

The Handshake Extension Message (Section 3.2.1.1) MUST be present in the conclusion request.

4.3.1.2.2. The Conclusion Response

The SRT Listener receives the conclusion request. If the values of the conclusion request are in any way NOT acceptable on the SRT Listener side, the connection MUST be rejected by sending a conclusion response with the Handshake Type field carrying the rejection reason (Table 7).

TODO: latency value. Special value 0.

TODO: Incorrect? The only case when the Listener can have precedence over the Caller is the advertised Cipher Family and Block Size (see Table 2) in the Encryption Field of the Handshake.

The value for latency is always agreed to be the greater of those reported by each party.

Destination SRT Socket ID: the SRT Socket ID field value of the previously received conclusion request.

There is no "negotiation" at this point.

4.3.2. Rendezvous Handshake

The Rendezvous process uses a state machine. It is slightly different from the UDT Rendezvous handshake [GHG04b], although it is still based on the same message request types.

The states of a party are Waving ("Wave A Hand"), Conclusion and Agreement. The Waving stage is intended to exchange cookie values, perform the cookie contest and deduce the role of each party: initiator or responder.

4.3.2.1. Cookie Contest

The cookie contest is intended to determine the connection role of a peer. When one party's cookie value is greater (with certain conditions, see below) than its peer's, it wins the cookie contest and becomes Initiator (the other party becomes the Responder).

The intent is to let the side with the greater cookie value become the Initiator (the other party becomes the Responder). However, the higher bit of the difference between the two cookie values is handled specially.
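This section does not spell out the special handling. The sketch below is assumed to mirror the behavior of the libsrt reference implementation (an assumption, not normative text): subtract the peer's cookie from the local one without 32-bit overflow, then look only at the low 32 bits of the result; a zero result is a draw, and a set bit 31 means the local party becomes the Responder. Cookies are treated as signed 32-bit values and `cookie_contest` is a hypothetical name.

```python
def cookie_contest(own_cookie: int, peer_cookie: int) -> str:
    """Return "INITIATOR", "RESPONDER" or "DRAW" for the local party.

    Python integers do not overflow, so the difference is exact, like the
    wide (64-bit) subtraction this sketch assumes.
    """
    contest = own_cookie - peer_cookie
    if (contest & 0xFFFFFFFF) == 0:
        return "DRAW"          # identical cookies: wait for new ones
    if contest & 0x80000000:
        return "RESPONDER"     # bit 31 of the 32-bit difference is set
    return "INITIATOR"
```

Because only the low 32 bits are inspected, the outcome is not always "greater cookie wins": with a local cookie of -1480577720 and a peer cookie of 811599203, the local party becomes the Initiator although its cookie is numerically smaller.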

4.3.2.2. The Waving State

Both parties start in a Waving state. In the Waving state, the parties wishing to connect, Bob and Alice, each send a WAVEAHAND handshake packet with the fields set to the following values:

HS Version: 5

Type: Extension field: 0, Encryption field: advertised "PBKEYLEN"

Handshake Type: WAVEAHAND (Table 4)

SRT Socket ID: socket ID of the party (HS sender).

SYN Cookie: Created based on host/port and current time.

HS Extensions: none.

Legacy HS Version 4 clients do not look at the HS Version value, whereas HS Version 5 clients can detect version 5. The parties only continue with the HS Version 5 Rendezvous process when HS Version is set to 5 for both. Otherwise the process continues exclusively according to HS Version 4 rules [GHG04b]. Implementations MUST support HS Version 5, and MAY not support HS Version 4.

The WAVEAHAND Handshake packet SHOULD not have extensions.

With SRT Handshake Version 5 Rendezvous, both parties create a cookie for a process called the "cookie contest". This is necessary for the assignment of Initiator and Responder roles. Each party generates a cookie value (a 32-bit number) based on the host, port, and current time with 1 minute accuracy. This value is scrambled using an MD5 sum calculation. The cookie values are then compared with one another.
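A sketch of a cookie generator in this spirit. The exact input string, the time origin, and the way the 128-bit MD5 digest is reduced to 32 bits are not specified here; the `host:port:minutes` format and taking the first four digest bytes as a little-endian signed integer are assumptions made purely for illustration, and `make_cookie` is a hypothetical name.

```python
import hashlib
import struct
import time
from typing import Optional


def make_cookie(host: str, port: int, now_s: Optional[float] = None) -> int:
    """Return a signed 32-bit cookie that changes at most once per minute."""
    if now_s is None:
        now_s = time.time()
    minutes = int(now_s // 60)                      # 1-minute accuracy
    material = f"{host}:{port}:{minutes}".encode("ascii")
    digest = hashlib.md5(material).digest()         # 16-byte MD5 digest
    (cookie,) = struct.unpack("<i", digest[:4])     # assumed 32-bit reduction
    return cookie
```

Since the inputs are only host, port and minute, an application that "connects to itself" feeds identical inputs on both sides and gets identical cookies, which is the case discussed next.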

Since it is impossible to have two sockets on the same machine bound to the same NIC and port and operating independently, it is virtually impossible that the parties will generate identical cookies. However, this situation may occur if an application tries to "connect to itself", that is, either connects to a local IP address when the socket is bound to INADDR_ANY, or to the same IP address to which the socket was bound. If the cookies are identical (for any reason), the connection will not be made until new, unique cookies are generated (after a delay of up to one minute). In the case of an application "connecting to itself", the cookies will always be identical, and so the connection will never be established.

If there is no response from a peer, the WAVEAHAND handshake SHOULD be repeated every 250 ms until a connection timeout expires. The connection timeout value is defined by the implementation.

If a WAVEAHAND packet is received from the peer during a CONCLUSION handshake, the state is transitioned to the Attention state.

4.3.2.3. Conclusion

In the Conclusion state each peer has received and now knows the other's cookie value. Thus each peer can perform the Cookie Contest operation (compare both cookie values according to Section 4.3.2.1) and thereby determine its role. The determination of the Handshake Role (Initiator or Responder) is essential for further processing.

Initiator replies with a Conclusion request handshake:

Extension field: appropriate flags.

Encryption field: advertised PBKEYLEN

Required Handshake Extension: HS Extension Message (Section 3.2.1.1) with HS Extension Type SRT_CMD_HSREQ.

Other handshake extensions are allowed.

If encryption is on, the Initiator (Bob) will use either his own cipher family and block size or the one received from Alice (if she has advertised those values).

The Responder responds with a Conclusion or a WAVEAHAND handshake without extensions until it receives the Conclusion Request from the peer:

Extension field: 0.

Encryption field: advertised PBKEYLEN.

Handshake extensions are NOT allowed.

TODO: What to do if WAVEAHAND or AGREEMENT or anything else is received in this stage? Repeat conclusion response but not more often than every 250 ms.

4.3.2.4. Initiated

Alice receives Bob's CONCLUSION message. While at this point she also performs the "cookie contest" operation, the outcome will be the same. She switches to the "fine" state, and sends:

Version: 5

Appropriate extension flags and encryption flags

Handshake Type: CONCLUSION

Both parties always send extension flags at this point, which will contain HSREQ if the message comes from an Initiator, or HSRSP if it comes from a Responder. If the Initiator has received a previous message from the Responder containing an advertised cipher family and block size in the encryption flags field, it will be used as the key length for key generation sent next in the KMREQ extension.

4.3.2.5. Serial Handshake Flow

In the serial handshake flow, one party is always first, and the other follows. That is, while both parties are repeatedly sending WAVEAHAND messages, at some point one party (let's say Alice) will find she has received a WAVEAHAND message before she can send her next one, so she sends a CONCLUSION message in response. Meanwhile, Bob (Alice's peer) has missed Alice's WAVEAHAND messages, so that Alice's CONCLUSION is the first message Bob has received from her.

This process can be described easily as a series of exchanges between the first and following parties (Alice and Bob, respectively):

Initially, both parties are in the waving state. Alice sends a handshake message to Bob:

Version: 5

Handshake Type: WAVEAHAND

SRT Socket ID: Alice's socket ID

While Alice does not yet know whether she is sending this message to a Version 4 or Version 5 peer, the values from these fields would not be interpreted by a Version 4 peer when the Handshake Type is WAVEAHAND.

Bob receives Alice's WAVEAHAND message and switches to the "attention" state. Since Bob now knows Alice's cookie, he performs a "cookie contest" (compares both cookie values). If Bob's cookie is greater than Alice's, he will become the Initiator. Otherwise, he will become the Responder.

The resolution of the Handshake Role (Initiator or Responder) is essential for further processing.

Then Bob responds:

Version: 5

Extension field: appropriate flags if Initiator, otherwise 0

Handshake Type: CONCLUSION

If Bob is the Initiator and encryption is on, he will use either his own cipher family and block size or the one received from Alice (if she has advertised those values).

Alice receives Bob's CONCLUSION message. While at this point she also performs the "cookie contest", the outcome will be the same. She switches to the "fine" state, and sends:

Version: 5

Appropriate extension flags and encryption flags

Handshake Type: CONCLUSION

Bob receives Alice's CONCLUSION message, and then does one of the following (depending on Bob's role):

If Bob is the Initiator (Alice's message contains HSRSP), he:

switches to the "connected" state, and

sends Alice a message with Handshake Type AGREEMENT, but containing no SRT extensions (Extension Flags field should be 0).

If Bob is the Responder (Alice's message contains HSREQ), he:

switches to the "initiated" state,

sends Alice a message with Handshake Type CONCLUSION that also contains extensions with HSRSP, and

awaits a confirmation from Alice that she is also connected (preferably by AGREEMENT message).

Alice receives the above message, enters the "connected" state, and then does one of the following (depending on Alice's role):

If Alice is the Initiator (received CONCLUSION with HSRSP), she sends Bob a message with Handshake Type = AGREEMENT.

If Alice is the Responder, the received message has Handshake Type AGREEMENT and in response she does nothing.

At this point, if Bob was an Initiator, he is connected already. If he was a Responder, he should receive the above AGREEMENT message, after which he switches to the "connected" state. In the case where the UDP packet with the agreement message gets lost, Bob will still enter the "connected" state once he receives anything else from Alice. If Bob is going to send, however, he has to continue sending the same CONCLUSION until he gets the confirmation from Alice.

4.3.2.6. Parallel Handshake Flow

The parallel handshake flow is unlikely, but it may still occur if the WAVEAHAND handshake messages are sent and received by both peers at precisely the same time.

The resulting flow is very much like Bob's behaviour in the serial handshake flow, but for both parties. Alice and Bob will go through the same state transitions:

In the Attention state they know each other's cookies, so they can assign roles. In contrast to serial flows, which are mostly based on request-response cycles, here everything happens completely asynchronously: the state switches upon reception of a particular handshake message with appropriate contents (the Initiator MUST attach the HSREQ extension, and the Responder MUST attach the HSRSP extension).

Here is how the parallel handshake flow works, based on roles and states:

(1) Initiator

Waving

Receives WAVEAHAND message,

Switches to Attention,

Sends CONCLUSION + HSREQ.

Attention

Receives CONCLUSION message which

either contains no extensions, then switches to Initiated, still sends CONCLUSION + HSREQ; or

contains HSRSP extension, then switches to Connected, sends AGREEMENT.

Initiated

Receives CONCLUSION message, which

either contains no extensions, then REMAINS IN THIS STATE, still sends CONCLUSION + HSREQ; or

contains HSRSP extension, then switches to Connected, sends AGREEMENT.

Connected

May receive CONCLUSION and respond with AGREEMENT, but normally by now it should already have received payload packets.

(2) Responder

Waving

Receives WAVEAHAND message,

Switches to Attention,

Sends CONCLUSION message (with no extensions).

Attention

Receives CONCLUSION message with HSREQ. This message might contain no extensions, in which case the party SHALL simply send the empty CONCLUSION message, as before, and remain in this state.

Switches to Initiated and sends CONCLUSION message with HSRSP.

Initiated

Receives:

CONCLUSION message with HSREQ, then responds with CONCLUSION with HSRSP and remains in this state;

AGREEMENT message, then responds with AGREEMENT and switches to Connected;

Payload packet, then responds with AGREEMENT and switches to Connected.

Connected

Is not expecting to receive any handshake messages anymore. The AGREEMENT message is always sent only once or per every final CONCLUSION message.
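The per-role transitions listed above can be written as two small transition functions. This is an illustrative model only: messages are (Handshake Type, extension) pairs, "DATA" stands for any payload or other post-connection packet, timers and retransmission are omitted, and all names are hypothetical.

```python
from typing import Optional, Tuple

# A message is (handshake type, extension), e.g. ("CONCLUSION", "HSREQ"),
# ("CONCLUSION", None), ("WAVEAHAND", None), ("AGREEMENT", None), ("DATA", None).
Msg = Tuple[str, Optional[str]]
Step = Tuple[str, Optional[Msg]]   # (new state, message to send or None)


def initiator_step(state: str, msg: Msg) -> Step:
    kind, ext = msg
    if state == "WAVING" and kind == "WAVEAHAND":
        return "ATTENTION", ("CONCLUSION", "HSREQ")
    if state in ("ATTENTION", "INITIATED") and kind == "CONCLUSION":
        if ext == "HSRSP":
            return "CONNECTED", ("AGREEMENT", None)
        # No extensions: move to (or stay in) Initiated, keep sending HSREQ.
        return "INITIATED", ("CONCLUSION", "HSREQ")
    if state == "CONNECTED" and kind == "CONCLUSION":
        return "CONNECTED", ("AGREEMENT", None)
    return state, None                 # anything else: no transition


def responder_step(state: str, msg: Msg) -> Step:
    kind, ext = msg
    if state == "WAVING" and kind == "WAVEAHAND":
        return "ATTENTION", ("CONCLUSION", None)
    if state == "ATTENTION" and kind == "CONCLUSION":
        if ext == "HSREQ":
            return "INITIATED", ("CONCLUSION", "HSRSP")
        return "ATTENTION", ("CONCLUSION", None)   # empty CONCLUSION again
    if state == "INITIATED":
        if kind == "CONCLUSION" and ext == "HSREQ":
            return "INITIATED", ("CONCLUSION", "HSRSP")
        if kind in ("AGREEMENT", "DATA"):
            return "CONNECTED", ("AGREEMENT", None)
    return state, None
```

Feeding each side's output to the other, starting from two WAVEAHAND messages, brings both roles to "CONNECTED"; a Responder in "INITIATED" that receives a data packet instead of the AGREEMENT also ends up connected, as described below.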

Note that any of these packets may be lost, and the sending party will never become aware of it. The missing packet problem is resolved this way:

If the Responder misses the CONCLUSION + HSREQ message, it simply continues sending empty CONCLUSION messages. Only upon reception of CONCLUSION + HSREQ does it respond with CONCLUSION + HSRSP.

If the Initiator misses the CONCLUSION + HSRSP response from the Responder, it continues sending CONCLUSION + HSREQ. The Responder MUST always respond with CONCLUSION + HSRSP when the Initiator sends CONCLUSION + HSREQ, even if it has already received and interpreted it.

When the Initiator switches to the Connected state it responds with an AGREEMENT message, which may be missed by the Responder. Nonetheless, the Initiator may start sending data packets because it considers itself connected; it does not know that the Responder has not yet switched to the Connected state. Therefore it is exceptionally allowed that when the Responder is in the Initiated state and receives a data packet (or any control packet that is normally sent only between connected parties) over this connection, it may switch to the Connected state just as if it had received an AGREEMENT message.

If the Initiator has already switched to the Connected state it will not bother the Responder with any more handshake messages. But the Responder may be completely unaware of that (having missed the AGREEMENT message from the Initiator). Therefore it does not exit the connecting state, which means that it continues sending CONCLUSION + HSRSP messages until it receives any packet that will make it switch to the Connected state (normally AGREEMENT). Only then does it exit the connecting state and the application can start transmission.

4.4. SRT Buffer Latency

The SRT sender and receiver have buffers to store packets.

On the sender, latency is the time that SRT holds a packet to give it a chance to be delivered successfully while maintaining the rate of the sender at the receiver. If an acknowledgment (ACK) is missing or late for more than the configured latency, the packet is dropped from the sender buffer. A packet can be retransmitted as long as it remains in the buffer for the duration of the latency window. On the receiver, packets are delivered to an application from a buffer after the latency interval has passed. This helps to recover from potential packet losses. See Section 4.5 and Section 4.6 for details.

Latency is a value, in milliseconds, that can cover the time to transmit hundreds or even thousands of packets at high bitrate. Latency can be thought of as a window that slides over time, during which a number of activities take place, such as the reporting of acknowledged packets (ACKs) (Section 4.8.1) and unacknowledged packets (NAKs) (Section 4.8.2).

Latency is configured through the exchange of capabilities during the extended handshake process between initiator and responder. The Handshake Extension Message (Section 3.2.1.1) has TSBPD delay information, in milliseconds, from the SRT receiver and sender. The latency for a connection will be established as the maximum value of latencies proposed by the initiator and responder.

4.5. Timestamp-Based Packet Delivery

The goal of the SRT Timestamp-Based Packet Delivery (TSBPD) mechanism is to reproduce the output of the sending application (e.g., an encoder) at the input of the receiving application (e.g., a decoder) in the case of live streaming (Section 4.2, Section 7.1). It attempts to reproduce the timing with which packets were committed by the sending application to the SRT sender. This allows the SRT receiver to schedule packets for delivery, making them ready to be read by the receiving application (see Figure 21).

The SRT receiver uses the timestamp in the SRT data packet header to deliver packets to the receiving application with a fixed minimum delay from the time the packet was scheduled for sending on the SRT sender side. Basically, the sender timestamp in the received packet is adjusted to the receiver's local time (compensating for time drift or different time zones) before the packet is released to the application. Packets can be withheld by the SRT receiver for a configured receiver delay. A higher delay can accommodate a higher uniform packet loss rate or a larger burst of lost packets. Packets received after their "play time" are dropped if the Too-Late Packet Drop feature is enabled (Section 4.6). For example, in the case of live video streaming, the TSBPD and Too-Late Packet Drop mechanisms allow SRT to intentionally drop packets that were lost and have no chance of being retransmitted before their play time. In this way, SRT provides a fixed end-to-end latency for the stream.

The packet timestamp, in microseconds, is relative to the SRT connection creation time. Packets are inserted into the receiver buffer based on the sequence number in the header field. Unless explicitly provided by the application, the origin time of a packet, in microseconds, is sampled when the packet is first submitted by the application to the SRT sender. The TSBPD feature uses this time to stamp the packet for its first transmission and for any subsequent retransmission. This timestamp and the configured SRT latency (Section 4.4) control the recovery buffer size and the instant at which packets are delivered at the destination (the aforementioned "play time", obtained by adding the configured latency to the timestamp).

Note that using the packet sending time to stamp packets would be inappropriate for the TSBPD feature: a retransmitted packet would receive a new time (the current sending time), putting it out of order when inserted at its proper place in the stream.
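The ordering problem can be shown with a small worked example. This sketch assumes a 120 ms latency, omits the receiver time base, and uses hypothetical packet times; it compares the play time of a retransmitted packet under origin-time stamping and under send-time stamping.

```python
LATENCY_US = 120_000  # assumed SRT latency of 120 ms, in microseconds


def play_time_us(timestamp_us: int) -> int:
    """Play time = packet timestamp + configured latency (time base omitted)."""
    return timestamp_us + LATENCY_US


def retransmit_timestamp(origin_us: int, resend_at_us: int,
                         use_origin: bool) -> int:
    """Timestamp carried by a retransmitted packet under each stamping policy."""
    return origin_us if use_origin else resend_at_us


# Packets 7 and 8 are submitted 10 ms apart. Packet 7 is lost and is
# retransmitted at t = 95 ms, after packet 8 has already been sent.
PKT7_ORIGIN, PKT8_ORIGIN, PKT7_RESEND = 70_000, 80_000, 95_000

for use_origin in (True, False):
    t7 = play_time_us(retransmit_timestamp(PKT7_ORIGIN, PKT7_RESEND, use_origin))
    t8 = play_time_us(PKT8_ORIGIN)
    policy = "origin time" if use_origin else "send time"
    print(policy, t7, t8, "in order" if t7 < t8 else "out of order")
# origin time 190000 200000 in order
# send time 215000 200000 out of order
```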

Figure 21 illustrates the key latency points during packet transmission with the TSBPD feature enabled.

The main packet states shown in Figure 21 are the following:

- "Scheduled for sending": the packet is committed by the sending application, stamped, and ready to be sent;
- "Sent": the packet is passed to the UDP socket and sent;
- "Received": the packet is received and read from the UDP socket;
- "Scheduled for delivery": the packet is scheduled for delivery and ready to be read by the receiving application.

Note that the round-trip time (RTT) of an SRT link may vary over time. However, the actual end-to-end latency on the link becomes fixed once the SRT handshake exchange has taken place, and is approximately equal to (RTT_0/2 + SRT Latency), where RTT_0 is the actual round-trip time during the SRT handshake exchange, that is, when the SRT connection is established.

The sending delay (the time between a packet being scheduled for sending and actually being sent) depends on hardware performance. It is usually small (several microseconds) compared with RTT_0/2 and the SRT latency, which are measured in milliseconds.
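The approximation can be checked with a few lines of arithmetic. The RTT and latency values below are hypothetical; the optional sending delay defaults to zero because, as noted above, it is usually negligible.

```python
def end_to_end_latency_ms(rtt0_ms: float, srt_latency_ms: float,
                          sending_delay_ms: float = 0.0) -> float:
    """Approximate fixed end-to-end latency: RTT_0/2 + SRT latency.

    The sending delay defaults to 0 because it is usually only a few
    microseconds, negligible next to the millisecond-scale terms.
    """
    return rtt0_ms / 2 + srt_latency_ms + sending_delay_ms


print(end_to_end_latency_ms(40, 120))    # terrestrial link -> 140.0
print(end_to_end_latency_ms(600, 2000))  # satellite-like link -> 2300.0
```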

4.5.1. Packet Delivery Time

Packet delivery time is the moment, estimated by the receiver, at which a packet should be delivered to the upstream application. The packet delivery time (PktTsbpdTime) is calculated upon receiving a data packet according to the following formula:

PktTsbpdTime = TsbpdTimeBase + PKT_TIMESTAMP + TsbpdDelay + Drift

where:

TsbpdTimeBase is the time base that reflects the time difference between the local clock of the receiver and the clock used by the sender to timestamp packets being sent (see Section 4.5.1.1);

PKT_TIMESTAMP is the data packet timestamp, in microseconds;

TsbpdDelay is the receiver's buffer delay (or receiver's buffer latency, or SRT Latency). This is the time, in milliseconds, that SRT holds a packet from the moment it has been received until the time it should be delivered to the upstream application;

Drift is the time drift, in microseconds, used to compensate for fluctuations between the sender and receiver clocks.
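The delivery-time formula translates directly into code. The one detail to watch is units: TsbpdDelay is expressed in milliseconds while the other terms are in microseconds, so this sketch converts it before adding. The function name and the example values are hypothetical.

```python
def pkt_tsbpd_time_us(tsbpd_time_base_us: int, pkt_timestamp_us: int,
                      tsbpd_delay_ms: int, drift_us: int) -> int:
    """PktTsbpdTime = TsbpdTimeBase + PKT_TIMESTAMP + TsbpdDelay + Drift.

    TsbpdDelay is given in milliseconds, so it is converted to
    microseconds to match the other three terms.
    """
    return tsbpd_time_base_us + pkt_timestamp_us + tsbpd_delay_ms * 1000 + drift_us


# Hypothetical values: time base 1_000_020_000 us, packet stamped 5 s into
# the connection, 120 ms latency, -15 us of accumulated drift.
print(pkt_tsbpd_time_us(1_000_020_000, 5_000_000, 120, -15))  # -> 1005139985
```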

The SRT latency (TsbpdDelay) should be a buffer time large enough to cover an unexpectedly extended RTT as well as the time needed to retransmit lost packets. TsbpdDelay is negotiated during the SRT handshake exchange, and its minimum value is 120 milliseconds. The recommended value of TsbpdDelay is 3 to 4 times the RTT.
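Combining the two rules of thumb in this paragraph gives a simple way to pick a latency value. This is a sketch under the assumption that the 3 to 4 times RTT recommendation and the 120 ms minimum are simply combined with `max`; the function name and defaults are hypothetical.

```python
import math


def recommended_latency_ms(rtt_ms: float, rtt_multiplier: float = 4.0,
                           minimum_ms: int = 120) -> int:
    """Pick TsbpdDelay as 3-4 x RTT, never below the 120 ms minimum."""
    if not 3.0 <= rtt_multiplier <= 4.0:
        raise ValueError("the recommendation is 3 to 4 times the RTT")
    return max(minimum_ms, math.ceil(rtt_multiplier * rtt_ms))


print(recommended_latency_ms(20))        # short RTT, minimum applies -> 120
print(recommended_latency_ms(150))       # -> 600
print(recommended_latency_ms(600, 3.0))  # -> 1800
```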

Note that TsbpdDelay limits the number of packet retransmissions to some extent, making it impossible to retransmit packets endlessly. This is important for live streaming (Section 4.2, Section 7.1).

4.5.1.1. TSBPD Time Base Calculation

The initial value of the TSBPD time base (TsbpdTimeBase) is calculated at the moment the second handshake request is received, as follows:

TsbpdTimeBase = T_NOW - HSREQ_TIMESTAMP

where T_NOW is the current time according to the receiver clock, and HSREQ_TIMESTAMP is the handshake packet timestamp, in microseconds.

The value of TsbpdTimeBase is approximately equal to the initial one-way delay of the link, RTT_0/2, where RTT_0 is the actual round-trip time during the SRT handshake exchange.
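The time base calculation, and how the result is then used to map sender timestamps onto the receiver clock, can be sketched as follows. The function names and numbers are hypothetical, and the example assumes the one-way delay stays constant between the handshake and the later packet.

```python
def initial_tsbpd_time_base_us(t_now_us: int, hsreq_timestamp_us: int) -> int:
    """TsbpdTimeBase = T_NOW - HSREQ_TIMESTAMP (both in microseconds)."""
    return t_now_us - hsreq_timestamp_us


def to_receiver_time_us(time_base_us: int, pkt_timestamp_us: int) -> int:
    """Map a sender timestamp onto the receiver clock (before latency and drift)."""
    return time_base_us + pkt_timestamp_us


# Hypothetical numbers: the receiver clock reads 7_500_000 us when an HSREQ
# stamped 2_000 us arrives, so the link delay is folded into the time base.
base = initial_tsbpd_time_base_us(7_500_000, 2_000)
print(base)                                  # -> 7498000
# A packet stamped 1 s later on the sender and delayed by the same amount
# maps to the receiver time at which it actually arrives.
print(to_receiver_time_us(base, 1_002_000))  # -> 8500000
```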

During the transmission process, the value of the TSBPD time base may be adjusted in two cases:

During the TSBPD wrapping period. The TSBPD wrapping period occurs approximately every 01:11:35 (hh:mm:ss). This time corresponds to the maximum timestamp value of a packet (MAX_TIMESTAMP). MAX_TIMESTAMP is equal to 0xFFFFFFFF, the maximum value of a 32-bit unsigned integer, in microseconds (Section 3). The TSBPD wrapping period starts 30 seconds before the maximum packet timestamp value is reached and ends once a packet with a timestamp within the (30, 60) seconds interval is delivered (read from the buffer). The value of TsbpdTimeBase is then recalculated as follows:

TsbpdTimeBase = TsbpdTimeBase + MAX_TIMESTAMP + 1

By the drift tracer. See Section 4.7 for details.
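The wrapping-period handling can be sketched as a small state holder. The start and end conditions and the `+ MAX_TIMESTAMP + 1` update follow the text. One detail is an assumption not stated above: while the wrapping period is active, a packet that has already wrapped to a timestamp below 30 s is mapped with one extra carry-over, so that local times stay continuous before the time base itself is updated. The `TimeBase` class is hypothetical.

```python
import datetime

MAX_TIMESTAMP = 0xFFFFFFFF       # largest 32-bit timestamp, in microseconds
WRAP_PERIOD_US = 30 * 1_000_000  # 30 s guard interval from the text


class TimeBase:
    def __init__(self, base_us: int) -> None:
        self.base_us = base_us
        self.wrap_check = False  # True while inside the wrapping period

    def base_for(self, pkt_timestamp_us: int) -> int:
        # Assumption: an already-wrapped packet gets one carry-over
        # until the time base itself is updated.
        if self.wrap_check and pkt_timestamp_us < WRAP_PERIOD_US:
            return self.base_us + MAX_TIMESTAMP + 1
        return self.base_us

    def on_delivered(self, pkt_timestamp_us: int) -> None:
        if self.wrap_check:
            # The period ends once a packet stamped within (30 s, 60 s) is read.
            if WRAP_PERIOD_US < pkt_timestamp_us < 2 * WRAP_PERIOD_US:
                self.base_us += MAX_TIMESTAMP + 1
                self.wrap_check = False
        elif pkt_timestamp_us > MAX_TIMESTAMP - WRAP_PERIOD_US:
            # The period starts 30 s before the timestamp wraps.
            self.wrap_check = True


print(datetime.timedelta(microseconds=MAX_TIMESTAMP + 1))  # -> 1:11:34.967296
```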

4.6. Too-Late Packet Drop

The Too-Late Packet Drop (TLPKTDROP) mechanism allows the sender to drop packets that have no chance of being delivered in time, and allows the receiver to skip missing packets that have not been delivered in time. The timeout for dropping a packet is based on the TSBPD mechanism (Section 4.5).

When the TLPKTDROP mechanism is enabled, a packet is considered "too late" to be delivered and may be dropped by the sender if the packet timestamp is older than TLPKTDROP_THRESHOLD.

TLPKTDROP_THRESHOLD is related to the SRT latency (Section 4.4). For the Too-Late Packet Drop mechanism to function effectively, it is recommended to use a value higher than the SRT latency. This allows the SRT receiver to drop missing packets first, while the sender drops packets if a proper response is not received from the peer in time (e.g., due to severe congestion). The recommended threshold value is 1.25 times the SRT latency.

Note that the SRT sender keeps packets for at least 1 second in case the latency is not high enough for a large RTT (that is, if TLPKTDROP_THRESHOLD is less than 1 second).
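The sender-side threshold rules can be sketched as two small functions. This assumes that "older than TLPKTDROP_THRESHOLD" means the packet's age on the sender clock exceeds the threshold, and that the 1-second floor is applied as a simple maximum; the function names are hypothetical.

```python
def sender_drop_threshold_ms(srt_latency_ms: float) -> float:
    """TLPKTDROP_THRESHOLD: 1.25 x latency, but packets are kept at least 1 s."""
    return max(1.25 * srt_latency_ms, 1000.0)


def sender_should_drop(t_now_us: int, pkt_origin_us: int,
                       srt_latency_ms: float) -> bool:
    """True if an unacknowledged packet is older than the drop threshold."""
    age_ms = (t_now_us - pkt_origin_us) / 1000.0
    return age_ms > sender_drop_threshold_ms(srt_latency_ms)


print(sender_drop_threshold_ms(120))   # 1 s floor applies -> 1000.0
print(sender_drop_threshold_ms(2000))  # 1.25 x latency -> 2500.0
```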

When enabled on the receiver, the mechanism makes the receiver drop packets that have not been delivered or retransmitted in time, and deliver the subsequent packets to the application when their play time comes.

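The receiver reads from its buffer as follows. It repeatedly locates the next available packet in the buffer and computes that packet's delivery time PktTsbpdTime (Section 4.5.1). While T_NOW is earlier than PktTsbpdTime, the packet is held. Once its delivery time is reached, any missing packets that precede it in the buffer are dropped, the packet is delivered to the application, and the read position advances past it.

Here, T_NOW is the current time according to the receiver clock.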
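The reading procedure can be sketched in Python, assuming each buffered packet already carries the PktTsbpdTime computed on arrival (Section 4.5.1) and ignoring sequence-number wraparound. The `ReceiverBuffer` class is hypothetical. Instead of waiting inside a loop, `read()` returns what is due and is expected to be called again later; fake ACK generation is not modelled.

```python
from typing import Dict, List, Tuple


class ReceiverBuffer:
    """Minimal sketch of TSBPD reading with too-late packet drop."""

    def __init__(self) -> None:
        # sequence number -> (PktTsbpdTime in microseconds, payload)
        self.packets: Dict[int, Tuple[int, bytes]] = {}
        self.pos = 0      # next sequence number to be read
        self.skipped = 0  # packets dropped as too late (receiver statistics)

    def insert(self, seq: int, pkt_tsbpd_time_us: int, payload: bytes) -> None:
        # Packets behind the read position arrived too late and are ignored.
        if seq >= self.pos:
            self.packets[seq] = (pkt_tsbpd_time_us, payload)

    def read(self, t_now_us: int) -> List[bytes]:
        delivered = []
        while self.packets:
            i = min(self.packets)  # next available packet
            play_time_us, payload = self.packets[i]
            if t_now_us < play_time_us:
                break  # not yet its play time
            self.skipped += i - self.pos  # drop missing packets before i
            del self.packets[i]
            delivered.append(payload)
            self.pos = i + 1
        return delivered
```

For example, if packet 1 never arrives, packet 2 is still delivered at its own play time, packet 1 is counted as skipped, and a late retransmission of packet 1 is ignored.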

When the receiver encounters a situation where the next packet to be played was not successfully received from the sender, it "skips" this packet and sends a fake ACK packet (Section 4.8.1). To the sender, this fake ACK is a real ACK, so it behaves as if the packet had been received. This facilitates synchronization between the SRT sender and receiver. The sender never learns that a packet was skipped. It is recommended that skipped packets be recorded in the statistics on the SRT receiver.

The TLPKTDROP mechanism can be turned off to always ensure clean delivery. However, a lost packet can then stall delivery for a longer, potentially undefined time and cause even worse tearing for the player. If TLPKTDROP drops packets too often, setting a higher SRT latency helps much more than turning the mechanism off.

4.7. Drift Management

When the sender enters the "connected" state, it notifies the application that the socket is ready for transmission. At this point the application can start sending data packets. It adds packets to the SRT sender's buffer at a certain input rate, and the sender transmits them to the receiver at scheduled times.

A synchronized time is required to keep proper sender and receiver buffer levels, taking into account the time zone and the round-trip time (up to 2 seconds for satellite links). Because of addition and subtraction round-off, and possibly unsynchronized system times, an agreed-upon time base drifts by a few microseconds every minute. Over many days, the drift may accumulate to the point where the sender or receiver buffers overflow or deplete, seriously affecting video quality. SRT has a time management mechanism to compensate for this drift.
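A quick calculation shows why a drift of "a few microseconds every minute" matters for long-running streams. The 5 µs per minute rate below is an assumed example value, and the functions assume the drift rate stays constant and is never corrected.

```python
def accumulated_drift_ms(drift_us_per_min: float, days: float) -> float:
    """Drift accumulated over `days` at a constant rate, in milliseconds."""
    return drift_us_per_min * days * 24 * 60 / 1000.0


def days_until_drift_exceeds(latency_ms: float, drift_us_per_min: float) -> float:
    """Days until uncorrected drift equals the whole latency budget."""
    return latency_ms * 1000.0 / drift_us_per_min / (24 * 60)


print(accumulated_drift_ms(5, 1))                  # -> 7.2
print(accumulated_drift_ms(5, 30))                 # -> 216.0
print(round(days_until_drift_exceeds(120, 5), 1))  # -> 16.7
```

With these assumptions, an uncorrected drift would consume an entire 120 ms latency budget in a little over two weeks.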

When a packet is received, SRT determines the difference between the time it was expected and its timestamp. The timestamp is calculated on the receiver side. The RTT tells the receiver how much time the packet was supposed to take. SRT maintains a reference between the time at the leading edge of the send buffer's latency window and the corresponding time on the receiver (the present time). This allows packet timestamps to be converted to local receiver time, based on which various events (packet delivery, etc.) can be scheduled.

The receiver samples time drift data and periodically calculates a packet timestamp correction factor, which is applied to each received data packet by adjusting the inter-packet interval. A received packet is not handed to the application right away. As time advances, the receiver knows the expected time of any missing or dropped packet and can use this information to fill any "holes" in the receive queue with another packet (see Section 4.5).

Note that the period for sampling time drift data is based on a number of packets rather than on a time duration, to ensure enough samples regardless of the media stream packet rate. Using a large number of samples attenuates the effect of network jitter on the estimated time drift. Since the actual time drift is very slow (affecting a stream only after many hours), no fast reaction is required.
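Sampling drift over a fixed number of packets can be sketched as a small averaging tracer. This assumes each sample is the difference between a packet's actual arrival time and its expected time. It also assumes, beyond what the text states, that an average exceeding a cap is split into a capped drift part and an "overdrift" part intended to be folded into the time base. The class, its defaults, and the cap are hypothetical illustrative values.

```python
class DriftTracer:
    """Averages per-packet drift samples over a fixed number of packets."""

    def __init__(self, samples_per_period: int = 1000,
                 max_drift_us: float = 5000.0) -> None:
        # Hypothetical defaults: period length in packets, and the largest
        # correction kept as "drift" before the excess is moved elsewhere.
        self.samples_per_period = samples_per_period
        self.max_drift_us = max_drift_us
        self.sum_us = 0.0
        self.count = 0
        self.drift_us = 0.0      # correction applied to packet delivery times
        self.overdrift_us = 0.0  # excess to be folded into the time base

    def update(self, sample_us: float) -> bool:
        """Add one sample; return True when a new drift estimate is ready."""
        self.sum_us += sample_us
        self.count += 1
        if self.count < self.samples_per_period:
            return False
        average = self.sum_us / self.count  # many samples attenuate jitter
        self.sum_us, self.count = 0.0, 0    # start the next sampling period
        if abs(average) > self.max_drift_us:
            self.overdrift_us = self.max_drift_us if average > 0 else -self.max_drift_us
        else:
            self.overdrift_us = 0.0
        self.drift_us = average - self.overdrift_us
        return True
```

Counting packets rather than seconds means a low-bitrate stream simply takes longer to complete a sampling period, but each estimate still rests on the same number of samples.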

The receiver uses local time to schedule events, for example to determine whether it is time to deliver a certain packet right away. The timestamps in the packets themselves are just references to the beginning of the session. When a packet is received (with a timestamp from the sender), the receiver refers to the beginning of the session to recalculate its timestamp. The start time is derived from the local time at the moment the session is connected. A packet timestamp equals "now" minus "StartTime", where the latter is the point in time when the socket was created.
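The "now minus StartTime" rule can be sketched as a small clock object. This assumes a monotonic clock as the local time source and truncates the result to the 32-bit timestamp field (MAX_TIMESTAMP, Section 4.5.1.1); the `SessionClock` class is hypothetical.

```python
import time

MAX_TIMESTAMP = 0xFFFFFFFF  # 32-bit microsecond timestamp field


class SessionClock:
    """Stamps packets relative to the moment the socket was created."""

    def __init__(self) -> None:
        # Assumption: a monotonic clock is used as the local time source.
        self.start_time_us = time.monotonic_ns() // 1000

    def now_us(self) -> int:
        return time.monotonic_ns() // 1000

    def timestamp_us(self, now_us: int) -> int:
        # "now" minus "StartTime", truncated to the 32-bit timestamp field.
        return (now_us - self.start_time_us) & MAX_TIMESTAMP
```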

4.8. Acknowledgement and Lost Packet Handling

To enable Automatic Repeat reQuest (ARQ) retransmission of data packets, the sender stores all sent data packets in its buffer.

The SRT receiver periodically sends acknowledgments (ACKs) for the received data packets so that the SRT sender can remove the acknowledged packets from its buffer (Section 4.8.1). Once acknowledged packets are removed, their retransmission is no longer possible and presumably no longer needed.

Upon receiving the full acknowledgment (ACK) control packet, the SRT sender SHOULD acknowledge its reception to the receiver by sending an ACKACK control packet with the sequence number of the full ACK packet being acknowledged.
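The sender-buffer side of acknowledgment handling can be sketched as follows. This assumes the full ACK carries its own ACK sequence number plus the sequence number of the first unacknowledged data packet (everything below it is acknowledged), and it ignores sequence-number wraparound. The `SenderBuffer` class and parameter names are hypothetical.

```python
from collections import OrderedDict
from typing import Tuple


class SenderBuffer:
    """Keeps sent packets until they are acknowledged (sequence wrap ignored)."""

    def __init__(self) -> None:
        self.unacked: "OrderedDict[int, bytes]" = OrderedDict()

    def on_send(self, seq: int, payload: bytes) -> None:
        self.unacked[seq] = payload  # stored so it can be retransmitted

    def on_full_ack(self, ack_number: int,
                    first_unacked_seq: int) -> Tuple[str, int]:
        """Drop every packet below first_unacked_seq and answer with an ACKACK."""
        for seq in [s for s in self.unacked if s < first_unacked_seq]:
            del self.unacked[seq]  # no longer retransmittable
        return ("ACKACK", ack_number)  # echoes the full ACK's sequence number
```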

The SRT receiver also sends NAK control packets to notify the sender about missing packets (Section 4.8.2). A NAK packet can be sent immediately after a gap in the sequence numbers of data packets is detected. In addition, a Periodic NAK report mechanism can be used to send NAK reports periodically. In that case, the NAK packet lists all the packets that the receiver considers lost up to the moment the Periodic NAK report is sent.

Upon receiving a NAK packet, the SRT sender prioritizes retransmission of the lost packets over regular data packets being transmitted for the first time.
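Gap detection on the receiver, periodic NAK reports, and retransmission priority on the sender can be sketched together. Both classes are hypothetical simplifications: sequence numbers start at 0 and never wrap, and the sender's retransmission queue is served in NAK arrival order.

```python
from collections import deque
from typing import Iterable, List, Optional, Tuple


class NakReceiver:
    """Detects sequence gaps and tracks packets it considers lost."""

    def __init__(self) -> None:
        self.expected = 0       # next sequence number expected in order
        self.loss_list = set()  # packets currently considered lost

    def on_data(self, seq: int) -> Optional[List[int]]:
        """Register a data packet; return the immediate NAK contents, if any."""
        nak = None
        if seq > self.expected:
            nak = list(range(self.expected, seq))  # newly detected gap
            self.loss_list.update(nak)
        self.loss_list.discard(seq)  # a retransmission fills its hole
        self.expected = max(self.expected, seq + 1)
        return nak

    def periodic_nak(self) -> List[int]:
        """Periodic NAK report: every packet still considered lost."""
        return sorted(self.loss_list)


class RetransmittingSender:
    """Serves retransmissions before packets sent for the first time."""

    def __init__(self, new_packets: Iterable[int]) -> None:
        self.new_packets = deque(new_packets)
        self.retransmit = deque()

    def on_nak(self, seqs: Iterable[int]) -> None:
        for seq in seqs:
            if seq not in self.retransmit:
                self.retransmit.append(seq)

    def next_to_send(self) -> Optional[Tuple[str, int]]:
        if self.retransmit:
            return ("RETX", self.retransmit.popleft())
        if self.new_packets:
            return ("NEW", self.new_packets.popleft())
        return None
```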

Retransmission of a missing packet is repeated until the receiver acknowledges its receipt, or until both peers agree to drop the packet (Section 4.6).