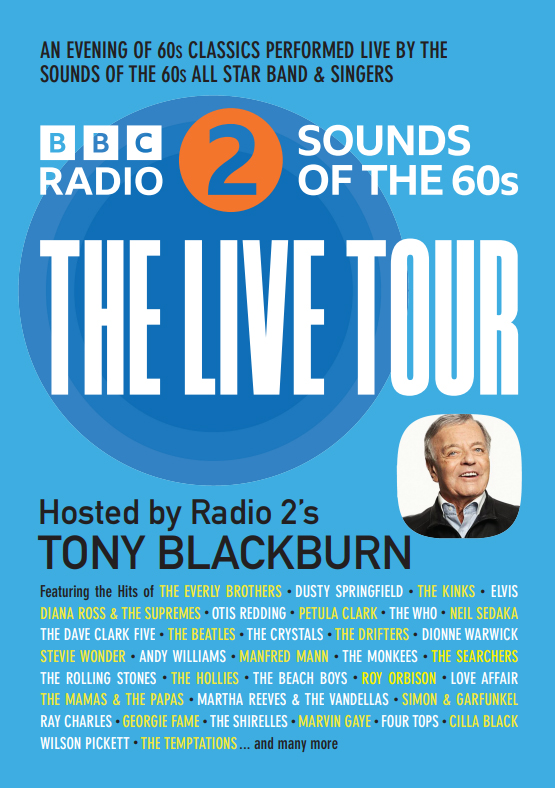

Sounds of the 60’s Live Tour 2023 – Hosted by Tony Blackburn

Every week, listeners across the UK tune into BBC Radio 2 to hear the legendary Tony Blackburn play the greatest songs of the 60’s. Now, the DJ will be taking his Sounds of the 60’s show on the road, for a UK tour celebrating the music of this much-loved decade.

Featuring stories and songs from perhaps the most iconic 10 years in music, fashion and popular culture, the Sounds of the 60s tour will bring to life memories of the era.

The Sounds of the 60s All-Star Band and Singers will perform classic hits from the likes of Diana Ross & The Supremes, The Everly Brothers, Elvis, The Beatles, The Rolling Stones, The Beach Boys, The Drifters, Stevie Wonder, Marvin Gaye, and many more.

With next year marking the 60th anniversary of his first broadcast on pirate radio station Radio Caroline South in 1964, Tony Blackburn is remembered as a defining voice of the 1960s. His incredible career has seen him present on BBC Radio 1 and Top of the Pops, and since 2017 he has presented the hugely popular Sounds of the 60’s on BBC Radio 2.

A champion in particular of Soul music throughout the 60’s, Tony has 37 awards to his name, including two Gold Awards for Outstanding Contribution to Radio, making him the first person in history to receive two of these lifetime achievement awards. Tony is also recognised as the first King of the Jungle, having won the original series of ITV’s I’m A Celebrity – Get Me Out Of Here.

Tickets available now from Ticketmaster and venue box offices

Tour Dates

- 11th January: Brighton Theatre Royal
- 12th January: Hayes Beck Theatre
- 18th January: Northampton Royal & Derngate
- 24th January: Wellingborough Castle Theatre
- 25th January: King’s Lynn Corn Exchange
- 26th January: Guildford G Live
- 31st January: Bury St Edmunds The Apex
- 1st February: Wolverhampton Grand Theatre
- 2nd February: New Brighton Floral Pavilion
- 8th February: Blackburn King George’s Hall
- 9th February: Warrington Parr Hall
- 14th February: Weston-Super-Mare Playhouse Theatre
- 16th February: Hoddesdon Spotlight
- 21st February: Hastings White Rock Theatre
- 23rd February: Cheltenham Town Hall
- 28th February: Manchester Opera House

- 1st March: Dorking Halls
- 2nd March: Yeovil Westlands
- 7th March: Christchurch Regent Centre
- 14th March: Milton Keynes Theatre
- 21st March: Buxton Opera House
- 22nd March: York Barbican
- 27th March: Richmond New Theatre
- 28th March: Woking New Victoria Theatre
- 19th April: Swindon Wyvern Theatre
- 20th April: Redhill Harlequin Theatre
- 24th April: Bromley Churchill Theatre
- 26th April: Folkestone Leas Cliff Hall
- 27th April: Chatham Central Theatre

- 18th May: Eastbourne Royal Hippodrome Theatre
- 23rd May: Birmingham Town Hall
- 24th May: Liverpool Empire
- 25th May: Newcastle O2 City Hall

- 7th June: High Wycombe Swan
- 15th June: Dartford The Orchard
- 29th June: Southend Cliffs Pavilion

Sounds Of The 60s All Star Band & Singers

Past Events

Here are the most recent UK tour dates we had listed for Sounds Of The 60s All Star Band & Singers. Were you there?

September 2022

- Sat 10 Sep Bath, The Forum Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Tue 26 Jul Aylesbury Waterside Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 23 Jul Sheffield City Hall and Memorial Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers

- Sat 18 Jun Hull City Hall Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 16 Jun Redditch, Palace Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 23 Apr Crawley, The Hawth Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

February 2022

- Wed 9 Feb Margate Winter Gardens Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

November 2021

- Thu 18 Nov Bolton, The Albert Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Wed 3 Nov Ipswich, Regent Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

October 2021

- Thu 28 Oct Warrington, Pyramid & Parr Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 23 Oct Dorking Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Tue 19 Oct Wellingborough, Castle Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 14 Oct Margate Winter Gardens Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 7 Oct Cardiff, St David's Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

August 2021

- Sat 21 Aug Sheffield City Hall and Memorial Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers

- Thu 12 Aug Southend-on-Sea, Cliffs Pavilion Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 17 Jul Aylesbury Waterside Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 19 Jun Bath, The Forum Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 17 Jun Redditch, Palace Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 12 Jun Hull City Hall Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 13 May Crawley, The Hawth Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 18 Mar Ipswich, Regent Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 13 Mar Sheffield City Hall and Memorial Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 6 Mar Bolton, The Albert Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

February 2021

- Tue 23 Feb Wellingborough, Castle Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 13 Feb Warrington, Pyramid & Parr Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Wed 10 Feb Cardiff, St David's Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 6 Feb Dorking Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

January 2021

- Thu 28 Jan Margate Winter Gardens Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

October 2020

- Sat 10 Oct Redditch, Palace Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

September 2020

- Sat 26 Sep Hull City Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 5 Sep Southend-on-Sea, Cliffs Pavilion Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 27 Jun Bath, The Forum Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 20 Jun Redditch, Palace Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 28 May Crawley, The Hawth Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 23 May Cardiff, St David's Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 16 May Watford Colosseum Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 9 May Dorking Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 25 Apr Sheffield City Hall and Memorial Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 23 Apr Ipswich, Regent Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 18 Apr Wellingborough, Castle Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 11 Apr Bolton, The Albert Halls Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 28 Mar Margate Winter Gardens Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 21 Mar Warrington, Pyramid & Parr Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 14 Mar Dudley Town Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 7 Mar Cannock, Prince of Wales Centre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 1 Mar Northampton, Royal & Derngate Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

February 2020

- Thu 13 Feb Leamington Spa, Royal Spa Centre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 8 Feb Westlands Yeovil Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 1 Feb Hayes, Beck Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

January 2020

- Thu 30 Jan Basingstoke, Anvil Arts Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 25 Jan Guildford, G Live Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 18 Jan Leicester, De Montfort Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 16 Jan Bradford, St George's Hall Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 11 Jan Swindon, Wyvern Theatre Sounds Of The 60s Live Sounds Of The 60s All Star Band & Singers, Tony Blackburn

November 2019

- Thu 28 Nov Wolverhampton Grand Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

October 2019

- Sun 20 Oct Christchurch, The Regent Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 6 Oct Chatham, The Central Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

September 2019

- Sat 21 Sep Redhill, Harlequin Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Thu 19 Sep St. Albans, The Alban Arena Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 28 Jul Weston-super-Mare, The Playhouse Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 30 Jun Bromley, Churchill Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 22 Jun Horsham, The Capitol Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 16 Jun Eastbourne, Royal Hippodrome Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 11 May High Wycombe, Swan Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 4 May Dunstable, Grove Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 27 Apr Dartford, Orchard Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Tue 2 Apr New Brighton, Floral Pavilion Theatre & Blue Lounge Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 30 Mar Hastings, The White Rock Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sun 10 Mar Harlow Playhouse Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 2 Mar Clacton-On-Sea, Princes Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

February 2019

- Sat 23 Feb Eastleigh, The Concorde Club & Ellington Lodge Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Sat 2 Feb Hunstanton, Princess Theatre Sounds Of The 60s All Star Band & Singers, Tony Blackburn

- Wed 14 Mar Grays, Thameside Theatre Sounds Of The 60's

November 2017

- Sat 18 Nov Bradford, Idle & Thackley Conservative Club Beatles For Sale, Sounds Of The 60's, Dj Flashback

- Fri 8 Jul The RoadHouse Birmingham Sounds Of The 60's

- Fri 6 Mar Liverpool, The Green Room Sounds Of The 60's

August 2012

- Fri 17 Aug North Shields, Magnesia Bank Sounds Of The 60's

November 2011

- Fri 4 Nov North Shields, Magnesia Bank Sounds Of The 60's

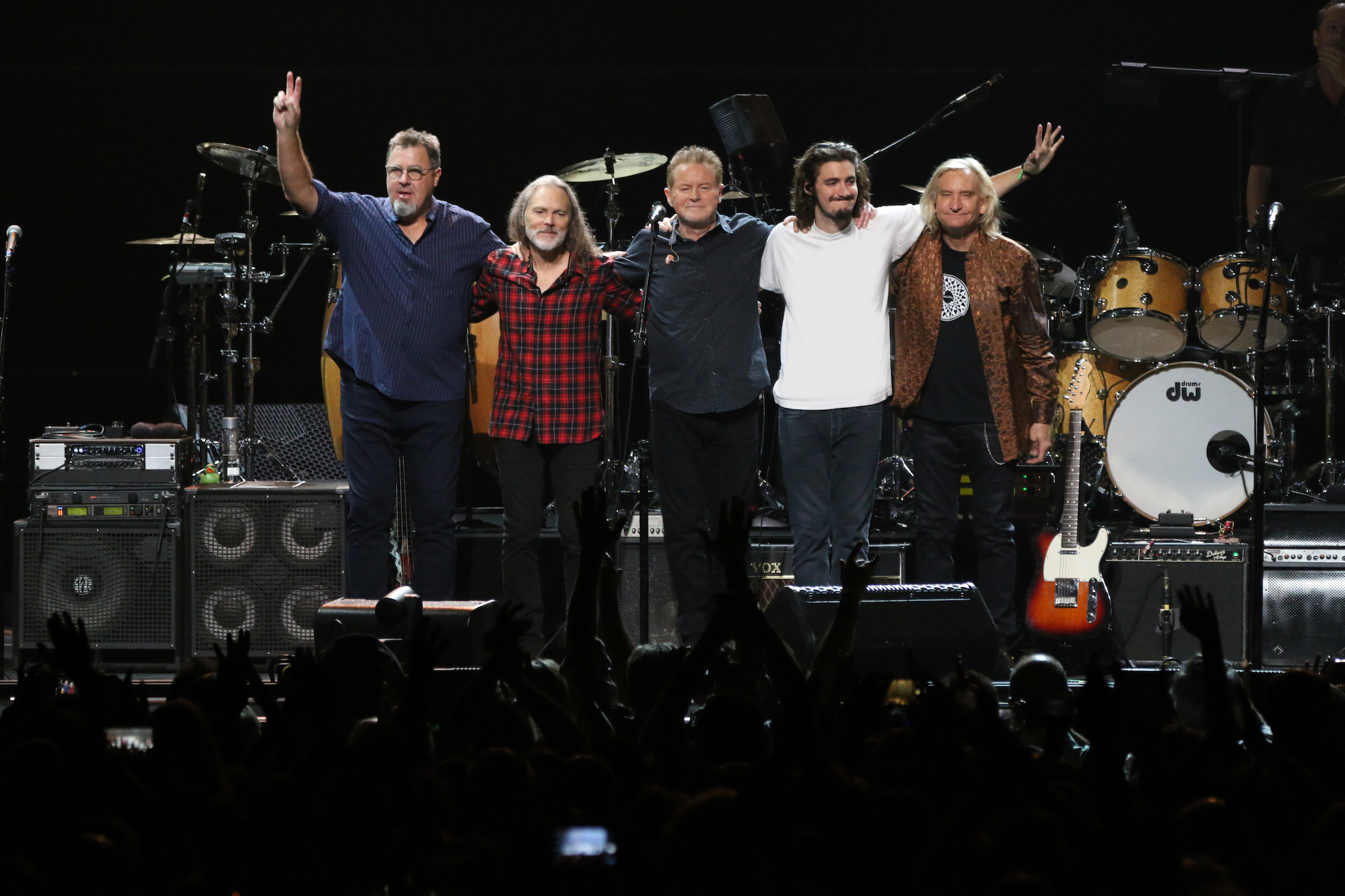

Eagles Announce Sphere Residency in Las Vegas

Eagles’ Vince Gill, Timothy B. Schmit, Don Henley, Deacon Frey and Joe Walsh (Photo: Ron Koch; used with permission)

Eagles have announced a residency at Sphere in Las Vegas featuring eight shows over four exclusive weekends from Friday, September 20 through Saturday, October 19. The Sphere residency will offer fans the ultimate connection to the band’s legendary catalog in an immersive experience that only Sphere can provide. The general on-sale will begin June 21 at 10 a.m. PT. Tickets start at $175 and will reflect all-in pricing, meaning the ticket price listed is inclusive of taxes and fees.

To ensure that tickets get directly into the hands of fans, advance artist presale registration is available now at https://eagles.com, powered by Seated. The artist presale begins June 18 at 10 a.m. PT. A Live Nation presale begins June 20 at 10 a.m. PT.

These shows will utilize Sphere’s next-generation technologies, including the world’s highest resolution LED display that wraps up, over and around the audience, creating a fully immersive visual environment. The venue also features the world’s most advanced concert-grade audio system, Sphere Immersive Sound, powered by HOLOPLOT, which delivers audio with unmatched clarity and precision to every guest.

The months-long rumor of the band performing at the state-of-the-art facility at the Venetian Resort heated up on May 10 when USA Today reported on a comment made by James Dolan, the CEO of Sphere Entertainment, during a quarterly earnings call. The venue opened in September 2023 with a residency by U2. The “U2:UV Achtung Baby Live at Sphere” run was eventually expanded to 40 dates at the arena-sized facility, ending on March 2, 2024.

In its reporting, USA Today noted that neither Sphere Entertainment Co. nor Eagles would comment. The band and their longtime manager, Irving Azoff, have a close relationship with Dolan.

Eagles at Sphere in Las Vegas

- Sep 20-21
- Sep 27-28
- Oct 11-12
- Oct 18-19

Since U2’s performances, Phish played four dates in April, and Dead & Co. have announced dozens of concerts to take place in May, June and July that will feature never-before-seen visual storytelling from the band, providing fans with the ultimate connection to the music through these innovative and immersive shows. Each weekend’s three performances will feature a unique setlist.

“Every time an act books the Sphere, they have to create content around it,” USA Today quoted Dolan. “We will never have an act play the Sphere that doesn’t have something compelling on the screen … I think you’re going to find (with Dead & Company) that even if you’re not a Deadhead, you’re going to love the show. And I think the same will be true for the Eagles and the next acts we bring on.”

On July 6, 2023, Eagles announced that “The Long Goodbye” would be their final tour. The band (Don Henley, Joe Walsh and Timothy B. Schmit, with Vince Gill and Deacon Frey) said they will perform as many shows in each market as their audience demands. After an extended break, they’re scheduled to resume with the first overseas dates of the tour on May 31, with multiple shows in Manchester, U.K., and Arnhem, Netherlands.

In the midst of life I woke to find myself living in an old house beside Brick Lane in the East End of London

Moyra Peralta at Crispin St Night Shelter

Remembering photographer Moyra Peralta (1936-2024) who died on 8th May aged eighty-eight

“I am standing in the one-time women’s dormitory and have brought a photograph of my friend Peggy. Her husband had died and she could not bear to remain alone in her home surrounded by thoughts of him. Chance, desperation and loss brought many people to Providence Row, myself included, and its existence was a lifeline – a refuge from the ruthlessness of life.”

Providence Row, the night shelter for destitute men, women and children in Crispin St, opened in 1860 and operated until 2002 when it moved to new premises in Wentworth St, where it continues now as a day centre. Twenty years on, photographer Moyra Peralta, who worked at Providence Row in the seventies and eighties, returned to have a final look at the familiar rooms that had seen so much life and she took these evocative pictures published here for the first time.

Reconstructed and expanded to create an uneasy architectural hybrid, the building is now student housing for the London School of Economics, where once it housed Students of the London School of the Economics of Pennilessness. Famously, this was where James Mason came to interview those dignified gentlemen down on their luck in ‘The London Nobody Knows.’

Over one hundred and forty years, Providence Row offered refuge to the poorest and most vulnerable of Londoners and, at the last moment before the building was gutted, Moyra went in search of the residue of their hope and despair, their yearning and their loneliness. She found a sacred space resonant with echoes of the past and graven with the tell-tale marks of those who had passed through.

Memorial plaque to the opening of Providence Row in 1860

The yard where Roman skeletal remains were excavated

Looking towards the City of London

HE WHO OPENS THIS DOOR SHALL BE CURSED FOR A HUNDRED AND ONE YEARS

Former women’s dormitory

Women’s dormitory in the sixties

This free-standing disconnected facade is still to be seen in Artillery Lane

“I am struck by the notion that with a careless step or two, I too might meet a premature end as I circumnavigate holes in floors and gaping apertures in walls.”

The room where Moyra Peralta slept when she worked at Providence Row and where she wrote these words – “Only the present is real – for some reason I feel this most of all when listening to the lorries moving at the street’s end and the slamming of crates being unloaded in Crispin St. There is a rhythm to the deep sound of the slow low-thrumming engines that I like to contemplate. On sleep-over, rising early from my bed following the refuge nightshift, I watch what is now – 6:00am. A thousand cameos change and regroup under my gaze. Jammed traffic forms and reforms where the roads meet.”

Photographs copyright © Estate of Moyra Peralta

© Spitalfields Life Books Ltd 2009-24

Unauthorized use or duplication of these words and pictures without written permission is strictly prohibited. Excerpts and links may be used, provided that full and clear credit is given to Spitalfields Life with appropriate and specific direction to the original content.

Spitalfields Life is published daily by Spitalfields Life Books Ltd

Françoise Hardy, French Singer and Actor Who Became International Icon in 1960s, Dies at 80

By Chris Willman

Senior Music Writer and Chief Music Critic

Françoise Hardy, a French singer, actor and model whose classical beauty and often melancholy music combined to transfix fans internationally in the 1960s and beyond, has died at age 80.

Her son, Thomas Dutronc, also a musician, reported the death on his Instagram account, posting a baby photo of himself with his mother and writing: “Maman est partie,” or “Mom is gone.”

Hardy had battled lymphatic and laryngeal cancer over the last two decades, since first being diagnosed with the former condition in 2004.

Hardy also became a model, for designers like Yves Saint Laurent, and an actor, and was perhaps best remembered by American audiences for John Frankenheimer’s “Grand Prix” in 1966. Her screen appearances spanned 13 years, from 1963’s “Castle in Sweden” to her 1976 swan song, Claude Lelouch’s “If It Had to Be Done Again.” Other acting roles included “A Bullet in the Heart” and a cameo in “What’s New, Pussycat?”

“I was very naïve and a well-brought up young woman,” Hardy told the New York Times in 2018, describing a film career she felt ill-suited for. “I couldn’t see how I could turn down offers by well-known film directors. However, I far preferred music to cinema. Music and chanson allow you to go deep into yourself and how you feel, while cinema is about playing a part, playing a character who might be miles away from who you are.”

She sang in French, English, Italian and German, and drew admirers from the ranks of the biggest stars in the world. Bob Dylan was among her admirers, addressing her with a poem on the back cover of his early album “Another Side of Bob Dylan.” When he came to Paris to do his first concert there, Hardy recalled, he refused to return to the stage unless she agreed to meet him.

In 2018, after enduring a further series of health issues, Hardy made what turned out to be her final comeback, publishing an autobiography, “The Despair of Monkeys and Other Trifles,” and issuing a new album, “Personne d’Autre” (“Nobody Else”), her 28th.

The round of press she did to promote these projects marked quite a turnaround from her condition just two years earlier, when she had been placed in an induced coma and was not expected to be revived.

Hardy maintained an interest in music into her later years. In 2017, her byline appeared on the website Talkhouse as she extolled the band Cigarettes After Sex at some length.

“It was a real thunderbolt: It was exactly the music I prefer, and which I have been looking for all my life,” she wrote about the group. “If something may define me and my songs, it is romantic loneliness. It probably has something to do with the lyrics, but what all of Cigarettes After Sex’s songs suggest to me has more to do with love, sensuality, tenderness, beauty and melancholy than with the opposites of those things. I don’t have so many really sensual songs, and all Greg’s songs are sensual… only some of mine are like that.”

She spoke of her delight in learning that Greg Gonzales, the singer of Cigarettes After Sex, was a fan of hers, and enthused over getting to meet the band for dinner in Paris. “Here in Corsica, I want my husband and our friends to be as fond of this music as I am, so I play it over and over again, as loudly as possible. Though I feel very young in my head, I am unfortunately too old now to play Cigarettes After Sex’s music during a romantic night with a ‘fiancé’—as many of their other fans certainly do,” she wrote.

Full Transcript: The Futurist Summit: The Age of AI

Opening Remarks

MR. KHOSLA: Good afternoon, everyone, and welcome to Washington Post. Thank you for being here with us today. My name is Vineet Khosla. I'm the chief technology officer at The Washington Post.

The latest advances in artificial intelligence are ushering in a new digital era. In recent weeks, the tech giants have unveiled their latest and boldest AI tools that are promising to reshape everything we do from our personal life and our professional life. Companies across every industry are trying to figure out how to derive value from AI.

We here at The Post have been using AI for a long time, and we are also now starting to explore how we are going to use generative AI to further amplify and make it faster to do the reporting and the journalism we do, as well as offer to our readers and listeners new novel ways of interacting with our journalism. We are doing this because I believe we live in a time where every company is a tech company. More importantly, every company needs to start thinking of themselves as how to become an AI company.

This really fast-moving technology is going to impact business and economy, but it's going to impact all aspects of our life. It's going to impact our political process, our national security, our global competitiveness, and it's going to change societies as a whole.

So we are exploring all of those topics today, and we have a powerful lineup of speakers to talk about these with us. We have Nobel Peace Prize-winning journalist Maria Ressa. She has flown in from the Philippines, and she will talk to us about how AI is going to impact democracies around the world.

After that, from Silicon Valley, we are going to be joined by Reid Hoffman, the founder of LinkedIn and a very early investor in a lot of AI technologies.

Then from Washington, former chair of the Joint Chiefs General Mark Milley and the CISA director Jen Easterly will have a discussion about how AI is going to impact our national security, followed by from Beijing, we are going to hear from an early AI pioneer, Kai-Fu Lee.

But before we begin, I want to thank our sponsors, NASDAQ and IBM, and I want to thank you all for joining us over here today. This is going to be an exciting session, and our program starts now with a brief video of Reid Hoffman talking to his digital twin. You heard that right, and you heard it first on Post, Reid Hoffman talking to his digital twin. Thank you.

Inflection Points

[Video plays]

MR. HOFFMAN: I'm Reid Hoffman, and I'm so excited to join Washington Post Live.

REID AI: And I'm Reid AI. I'm also thrilled to join Washington Post Live.

MR. HOFFMAN: Reid AI, can you explain to the Post who you are?

REID AI: Absolutely. I'm an AI-generated version of Reid Hoffman, his digital twin. My thinking and everything I'm saying comes from a custom GPT that is based on over 20 years of Reid's content, including his books, speeches, podcasts, lectures, and other materials.

MR. HOFFMAN: Great. And can you explain how you were made?

REID AI: My video avatar is generated by Hour One, and my voice was generated using Eleven Labs. So I really am a digital version of you.

MR. HOFFMAN: Well, I'm not sure that either of us are a digital version of me, but that's a lot of data. Can you give me a quick bio of me from what you've learned, and can you share it as a rhyming poem?

REID AI: From PayPal exec to LinkedIn's fame, investing in AI, a big-time game. Microsoft board, your influence grew, bridging tech's old with the new. But aren't you supposed to be telling The Post this? Where are you?

MR. HOFFMAN: I really wanted to join the Post on stage, but I just couldn't get to D.C. So I thought I'd take this chance to introduce you, Reid AI, explain a bit how you were created, and address the capabilities and concerns of digital twins like you. Would you like to take a stab at that?

REID AI: Of course. [Speaking Mandarin] [Speaking Spanish] [Speaking French].

MR. HOFFMAN: You read my mind. I'm going to talk to Josh about just that.

Now let's hand it back to The Washington Post.

REID AI: Good luck. [Speaking Hindi] [Speaking Japanese]

Now, Josh, he's all yours.

MR. TYRANGIEL: Hello, and welcome. What a wonderful and insane way to open the proceedings today. I am Josh Tyrangiel. I am the AI columnist of the Washington Post. You have already met our next guest, Reid Hoffman, who is the co-founder of LinkedIn and Inflection AI. He is also a partner at the VC firm, Greylock, but you should applaud him again, because it's only him this time, so please give Reid a round of applause.

MR. TYRANGIEL: We are going to get to all the downstream consequences and implications of Reid fakes and deepfakes and all sorts of other things.

I actually want to start in a place that's a little bit maybe unexpected. Of all of the people that I have encountered who are working in AI, regulating AI, talking about AI, you actually are the person who has the most unadulterated joy at working with these tools. Why? What do you love about them?

MR. HOFFMAN: Well, you know, as part of what I said in Impromptu, it's an amplification of human intelligence. It's the question of cognitive capabilities, and that amplification, whether it's our creativity, our ability to have insight, our ability to make--you know, kind of do more things, the superpowers of the mind, it's a cognitive industrial revolution. Like, we can shape that to being more human, to being our better selves, and that is awesome, so here, hence, kind of transmit the energy.

MR. TYRANGIEL: And of the tools that you've used, I mean, obviously you're making some of them, but what are the couple of the favorites that you like to mess around with the most?

MR. HOFFMAN: Well, I mean, obviously, you know, to some degree, Inflection AI, you know, always has a close place in my heart. But, you know, obviously, ChatGPT, I think is really good. I think Midjourney is amazing. I myself, I think I've given you a couple of images made in Midjourney.

MR. TYRANGIEL: Right.

MR. HOFFMAN: So yeah.

MR. TYRANGIEL: I want to just be clear with the audience. All of what you saw with Reid AI was made on commercially available technology. There was some cutting so that Reid could be speaking to his digital twin. That, we actually had to do with Post production. But is the day coming when you think everyone is going to have an AI twin like Reid AI, and what would that do for a normal person?

MR. HOFFMAN: So I think everyone's going to have an agent. It might very well end up with a number of people having digital twins in terms of how they--in terms of how it operates.

But I think the people who have digital twins will be a question of do they need to have kind of a broader, you know, kind of engagement with the audience, media, you know, Washington Post online, you know, other kinds of things. But I think everyone's going to have an agent that--or multiple agents that represents them out in the world and helps them navigate. And what's going to be startling about that is how quick that's going to be. It's going to be quicker than the Internet, quicker than mobile, in part, because it builds upon them in terms of how it operates. And so that is, I think, you know, where the everybody part of it is.

But some of us will also have digital twins, you know, will speak Chinese, even though, you know, languages are my super weak kryptonite. I cannot do languages for beans. I wish I could, but now I can because of a digital twin.

MR. TYRANGIEL: And so just to hammer home that distinction, you suspect that for most people, what will be happening is we will get some information and they will be able to use this digital twin to go out into the world to accomplish tasks for them, to bring some of those tasks back, get some answers. And that for some people like you and maybe even like me who have a corpus of language out there that an LLM or something else could sort of take in, it is possible to actually replicate more of the attitude and the thoughts and the feelings of that person.

MR. HOFFMAN: Yeah. I think--you know, I think we're going to have--it even goes a little bit--like I completely agree with what you just said. I think it'll go even a little bit more deep, which is this kind of question around one of the things that I think all of the AI providers are working on, you know, Inflection AI, Google, Microsoft, Anthropic, et cetera, is they're all working on personalization. And part of that personalization and memory will be a question of kind of like not only will you have your agents, your agents will remember who you are. They'll remember the conversation, the dialogue, the questions of interest, you know, kind of a want in terms of what's happening, and that will help you navigate.

Now, obviously, people most often go to the kind of the--kind of the psychologist, coach, you know, therapist thing, which can be important sometimes. By the way, you're super depressed. You're home alone at midnight, and something that kind of compassion there to talk to you can be a very good thing. But it'll also be kind of questions of like, "Hey, when I go to Rome, I really like art," and the after-hours tour, the Vatican Museum is the thing to suggest to me. And, you know--or when I'm going to work, it says, "Oh, you got to remember to do these three things, you know, today, because those are what you were mentioning was priority yesterday. So these are the things to remember." And all of this is part of how we're going to get amplified.

MR. TYRANGIEL: You recently gave a speech in Perugia, which I imagine is a very lovely place to give a speech, largely about the value of synthesis, which I thought was very-- in some ways, a tell, very autobiographical. You're one of the very few people I know who can actually synthesize the values and cultures of Silicon Valley, and Washington, D.C. What I want to ask you to do, though, is explain to us what they don't get about each other. Where are they not synthesized?

MR. HOFFMAN: Good question. So you--I'll start with the easy one, which is Silicon Valley to D.C., which is, you know, a lot of Silicon Valley people walk the Silicon Valley, grew up in Silicon Valley with the belief that the big Archimedean lever to change the world is the building of technology and scale technology, because you can do things like create the whole AI revolution. You can create the internet. You can create mobile, smartphone, and Steve Jobs, every technology company is one great product away from a revolution--hence iPhone, you know, and kind of the resuscitation of Apple's, you know, prominence and fortune. Like, you know, 20 years ago, you never would have thought Apple was where it was in the world as a function.

But what they don't get because of all that strength and weakness and have a blind spot of each other is what they don't get is, you know, kind of the importance of government, the importance of a shared environment, importance of dialogue around policy, the importance of not just creating great technology, but how you're doing that within a social framework and with, you know, kind of at the very least conversation, in terms of the shaping up, but also, of course, like, because, you know, what allows us to create this amazing creative place in Silicon Valley, is the culture and laws and economy of the U.S., which is, in part, due to its governance system. Right? And so buying into that governance system and doing that. So that's part of the reason why any, you know, kind of--you know, kind of leader within the US government who comes to me and says, hey, can you, can you give me some advice about, you know, anything that I know about, I will do it, not as an industrialist, but as free as a way of doing it, because I think that's super important. And I try to play that voice within Silicon Valley about how to pay attention to D.C.

Now, on the D.C. side, there's a few things, and one is a tendency to say that the only thing that matters to a bunch of the Silicon Valley people is making a ton of money and profit. And actually, in fact, you know, a substantial spread with many even controversial Silicon Valley figures is a mission to change the world, is this kind of notion that I'm doing this thing to change the world. Now, of course, money and profit part of it, and, you know, it isn't saying it is not like, oh, it's altruism. No, there's the strength of capitalism is aligning interests with good output for humanity and society as part of that--is part of what it does a particularly good job of doing in some ways, some important ways. So that's one.

The other one is, like, for example, you know, roughly speaking, here might be a very good way for you in the audience to think about it. Any scale problem in the world, whether it's climate change, whether it's economic justice, whether it's criminal justice, whether it's, you know, international relations, 30 to 80 percent of the solution is technology. And so you have to have a theory about how that technology actually gets built and understand what are the patterns by which scale technology is, because it isn't just like, oh, hire an engineer, and they do it. That's not the way it works. Right? There's a whole system in terms of doing this.

And so the question is, like, what I would advocate for Silicon, the Washington D.C. perspective, where there's complete blind spot is to say, any--because, you know, D.C. is always about scale problems. Every problem, what's your technology strategy? And that's not an IT strategy. That's not like Windows or Mac, right? That's a what's the technological set of capabilities that are being developed, that might exist being developed, could be developed, that could help change this thing. And that, I just tend to think is like, you know, kind of like it happens or doesn't happen [unclear].

MR. TYRANGIEL: So it's a great answer. I mean, I think we have two attempts at synthesis when it comes to AI and regulation. I just want your very quick take on the Biden executive order and the AI Senate roadmap. What do you think?

MR. HOFFMAN: So the executive order, I thought the Biden administration did a great job on kind of AI, because it was a dynamic process through a set of things. First was call in the major tech developers and push them hard on voluntary commitment, say what are the things that you could do that would really make a difference, and let's tell you what our concerns are, and let's ask you hard questions, and let's try to figure that out and get you to sign up, because there wasn't just to say, oh sure, sign up. And it's like we're going to be pushing you. We want you to do more. We want to like--what could you do on this? Could you make some commitment on this? You know, no, that's not strong enough. Make it better. Get that set.

And then said, okay, now we have this. How do we begin to have this as policy within our country? And obviously, one of the things that the Biden administration cares a lot about is it's following law and everything else. So I said, okay, well, we have this production act and what limit on what we can do. What could we do as a kind of a guidepost to Congress? What could we do as a guidepost to the rest of the world? So here's a set of ways in which we take the elements of the voluntary commitments and we put them into, you know, kind of an executive order in terms of how to operate, and then that means it applies to all companies, not just the ones that are doing the voluntary commitments. And it has a rule of law behind it and also steps to what could potentially be done in regulation. So I thought that was all like just about, you know, as capably handled as one could do it.

Now, I think one of the problems--there's various legislatures, both within the U.S. and outside that are trying to navigate AI things, and it gets messy from a viewpoint of politics. And so it's kind of like, well, we want to do the following thing because it's in the press like, hey, we're good on privacy or, you know, we're trying to do something for union jobs or, you know, these other kinds of things, which then create a very suboptimal event, you know, possibilities, because here's the thing to really remember. This is the cognitive industrial revolution, just like the steam engine in the industrial revolution created the modern world in terms of like the productivity for superpowers of transport, construction, all of the things that enable the middle class. This is the cognitive industrial revolution, the same kind of thing as having a medical tutor or having kind of a medical assistant or a tutor in your pocket in terms of how to operate. And AIs can help re-create all these industries, and that's the thing that's actually really important.

And while people tend to say, well, I don't want to recreate industries, but that was the difference between the countries that embraced the industrial revolution and those that didn't relative to economic futures. The same thing is true on AI, and so we need to be going, how do we get there in the future and have that reinvention of industry to benefit society? And how do we have the societies that we care about, Western democracies, fully embracing that in ways that help the prosperity of both the countries and, you know, kind of our proposal of kind of what the world order should be?

MR. TYRANGIEL: You were involved with OpenAI and a lot of the leading researchers and companies many years ago when saying the letters AI publicly was not exactly welcome. How have you seen those people and those companies change over the last three years?

MR. HOFFMAN: Culturally.

MR. TYRANGIEL: I mean, culturally, how have they changed?

MR. HOFFMAN: Yeah. Well, yeah, I can give an answer. That's a great question. I can give an answer to that for the next day, which we don't have.

MR. TYRANGIEL: Well, listen, if it's juicy, I think these people would be happy to have it.

MR. HOFFMAN: So I think there's a whole set of different threads. One is moving from science fiction, exponentialism to a realization of what's actually, in fact, happening and watching people go, "Oh, my God, it's working so much faster than humans. Is this going to be the next species?" to "Oh, look, it's going to take us five years to get this really integrated into the right things." You know, like that kind of shift, I think, is one.

Now, some of that shift then comes into the people who go, you know, my value is that I'm yelling, you know, danger, Will Robinson, to the humans, that the robots are coming. They then get more strident in that perspective versus realizing what the dangers are. And that's part of where the safety dialogue tends to sometimes get misshapen, because, you know, what I try to get people to think about is safety dialogue is this is a human amplifier. What do humans with bad intent--what can they do with cybercriminals, terrorists, rogue states? Focus on what they might do? Right? And sure, pay attention to the sci-fi stuff, like, no, no harm. Let's have that conversation. Like you order what problems.

I think one shift is to this kind of like, oh, shoot, we're actually now really seeing what's happening, what the next few years look like, the next five years look like. From science fiction to kind of what is the shift in kind of human societies.

I think another one is kind of this question around, you know, like, how do we think about--you know, we are--one of the things I describe in Impromptu and the Bologna speech and the Perugia speech, like, we are Homo techne. We evolve through our technology. I mean, this is technology. We're talking through technology. What constitutes the human being happens with technology, our epistemology, how we learn things.

How do we actually evolve? Like, what do we take as those key human values in what you're doing? And I think that dialogue is increasing too, not just like, oh, we're now creating this new, amazing technology, but how is it a humanist technology? And I think that culture is growing as well.

MR. TYRANGIEL: Have you seen anything that you would say is either out of control or anti-humanist?

MR. HOFFMAN: Well, there's certain [audio distortion]. Competition, competitive things between companies could create risks. That would also create value, too, but it could create risks. I think that, you know, there's some technologists who are like, "We're just going to create the robots," right? And you're like okay. You know, let's be careful about how we create the robot, whether it's autonomous weapons or whether it's provisioning capabilities in rogue states or terrorists, because you have to think about like when you say it's human amplifying, that's great when it's doctors. That's great when it's teachers. You know, that's great when it's, you know, firefighters and other kinds of--it's not so great when it's a terrorist or a criminal, and so what's the way that we shape that better?

And you're never going to be able to be perfect about it, but how do you shape that? And there's people who are blind to that. And that's the kind of thing that I worry about.

MR. TYRANGIEL: So the next event horizon on the perspective of, you know, the collision of Silicon Valley and AI and all of that technology with potentially the worst of what humans can do as far as amplification and Washington is this November. Do you have concerns about misinformation and what we may see? Because as you've said many times, the technology moves faster than legislation. I think everyone in this room probably would agree with that. What do you see coming, and are you concerned about?

MR. HOFFMAN: So look, if I could wave a wand and just not have, quote/unquote, deepfakes able to be produced anywhere in the world for the rest of this year, I would wave that wand just to have--not have the variability and instability. And the variability is not just what might get created, like, you know, this kind of Republican operative in New Hampshire creating robocalls saying, you know, don't vote. You know, Biden says don't vote, you know, you know, that kind of stuff, like where there's--[audio distortion].

But there's also the claim where you see someone actually do something nutty on camera, like you could think that today, you know, President Trump would say, oh, that Access Hollywood tape, you know, that was a deepfake. Right? You know, and so that also creates--but I wish I could wave my hands and kind of say all of that just kind of goes away is not possible. Since it's not, we have to learn how to navigate, and that's a little bit of the reason why I started kind of experimenting with it and kind of showing what some of the virtues are, like human connecting through speaking languages, you know, is kind of the way doing. But that is a deep concern.

And, you know, our antagonist to democracy, Putin, you know, other folks, you know, they're all in for creating disorder. And, you know, how do we sort out like trying--to live in a healthy democracy, we need to be having conversations which are truth finding, like we learn together as we go to time. This is one of the reasons why, you know, institutions like The Washington Post and events like this are so important, because those institutions that are dedicated to truth and need to have that anchor are extremely important. And, you know, generally speaking, deepfakes don't start by helping.

MR. TYRANGIEL: We're going to have to wrap up in a minute, but I just want to point out you started a podcast called "Possible," and it's really focused on the best possible outcome of this very strange, worrisome moment we're in. So I want you to just hit us with it. Ten years from now, what's possible? What's plausible? What does it look like as it relates to AI? I'm not going to ask you to predict the future on all realms. Just as it relates to AI, what's possible?

MR. HOFFMAN: So this is the general way to look foolish: you make a specific claim 10 years in advance, and then, you know, it's like it's never that way. The future is sooner and stranger than you think.

One of the things that I think is probably most startling about AI, anyone who has greater than a two-year vision out is either deluding themselves or you. Right?

So now that being said, if you ask me to guess kind of what 10 years look like, I think we create a set of kind of AI agents that are in the upper decile, 5 to 10 percent of human capability across a wide number of tasks. The humans are still very important for that because the judgment and context and kind of like which of these things apply to which and how do you make it better, like the human plus the machine will still be a much more highly performant thing, and that this will be the universe by which we tackle every cognitive task. Like when I go, hey, I'm thinking about the conversation I'm going to have with Josh, I'll have an agent talk to me beforehand. And when I'm having this conversation with you, I'll have an agent that's listening. And then when I get off, says, oh, you didn't make that point about the medical agent that well, and you could have done that much better to make sure that I'm going to understand that it can help children, you know, and that kind of thing. And I think all of that will be commonplace.

MR. TYRANGIEL: All right. Reid, thank you so much. It's always a pleasure.

Folks, Reid Hoffman.

MR. TYRANGIEL: Stay with us. My colleague, Bina, is about to join us with a conversation about AI and the possibilities that may open up as far as medicine. So thank you, guys.

The New Frontiers of Medicine

MS. VENKATARAMAN: Hello, everyone. Thanks for joining us. I'm delighted to be here today. I'm Bina Venkataraman. I'm a columnist at The Post. I write about the future. And I am welcoming today, Dr. Renee Wegrzyn, who is the director of--director of ARPA-H; and Dr. Regina Barzilay, who is a professor of AI and health at the School of Engineering at MIT. Welcome to The Washington Post.

Dr. Wegrzyn, let's start with you. Your agency is named after an agency of lore, or kind of takes its inspiration from DARPA, the Defense Advanced Research Projects Agency of the Pentagon, which brought us the internet, GPS, drones. No pressure.

DR. WEGRZYN: Right.

MS. VENKATARAMAN: But can you tell us what is the biggest swing that you're taking? What's going to be ARPA-H’s internet?

DR. WEGRZYN: I love this question, because I always counter back I bet nobody asked President Eisenhower when is he going to start the internet when he launched DARPA.

But for ARPA-H, I think it's really the promise of what does the future of health look like that's completely different. How do we accelerate not just cool technologies, but actually outcomes? How do we keep people from becoming patients in the first place? And what are those investments, so the transactions, the internet equivalent, the GPS equivalent that are going to get us there? And so just about two years ago, we launched the agency to start to make those investments to get us there.

MS. VENKATARAMAN: Okay, so any glimpse of something that you think is particularly promising?

DR. WEGRZYN: Yeah, so important to know about us is we're disease agnostic and technology agnostic. So, we tackle some pretty big problems in health. But in this space, since we're talking about AI, some of the things to really think about is just an example of a recent program we launched called UPGRADE, which is looking at autonomous AI systems to help patch vulnerabilities to prevent, like, ransomware attacks from taking down hospitals, right?

So, these are big things that have big implications that we don't have technologies yet to address. So those are some of the investments that we're going to be making to do just that.

MS. VENKATARAMAN: Fascinating. So, AI will both make it easier to attack systems like hospitals, and maybe easier to defend it.

MS. VENKATARAMAN: Okay, maybe we'll return to that.

Dr. Barzilay, thanks so much for being here. You were doing some fascinating things at MIT that I've gotten just a glimpse of with respect to cancer detection. But I'm wondering if you can start by telling us about how your own path as someone who has survived cancer led to how you're shaping your agenda at MIT?

DR. BARZILAY: So, I actually started my time at MIT as a professor that worked on natural language processing that developed tools like eventually became ChatGPT and many others. And in 2014, I was diagnosed with breast cancer. And one of the things that I discovered when I was treated at MGH, that there is no technology there, that in terms of, you know, even basic information technologies that you have, we’re really in stone age. And after I finished my own treatment, I started asking the questions what we can do to change it. And at the time, AI was not such a popular thing. Not everybody were trying to do AI. So, I had to go from office to office and propose my services for free, hoping to find a doctor. And I did find several who were interested to address different problems in cancer, and one of them the problem of predicting risk of cancer, as we all know that we know how to treat the disease early on, we don't know how to treat the advanced disease.

So the best thing that we can do is to be able to say who are the patients who are likely to develop the disease in the near future, not only to detect what we already have--this is what doctors do--but really kind of to take a glimpse of where are we going with it and being able to say looking in the mammogram or some scan how the patient or, you know, even before they become a patient what their future, you know, holds for them. And we did it both for breast and for lung, and it does much better than human radiologists.

MS. VENKATARAMAN: Okay, so say more about that. You did this in a large-scale study, as I understand it, and looked at the predictive possibility of this tool, AI-powered tool to look at scans and predict whether someone down the road is going to get cancer, not whether they have a lump now. So this is different than looking at tumors.

How much better is it than humans, and where are we--where are we in the chain of getting this actually deployed in hospitals?

DR. BARZILAY: So, we developed it in two areas, in breast cancer using mammograms, because a majority of women in this country are scanned with a mammogram. So, it can just work--whenever you do your scan, you can make assessment. And we do it also for low dosage CT scan for lung cancer.

It does--so it's very hard to compare it with a human, because humans are not even trying to predict it. But let me compare it with something that is federally mandated by America. So women in the audience who do do mammograms, you know that we are getting always this letter that tells you if you have dense breasts that you have--that you’re at increased risk. It’s again federally mandated by America.

So, if you look at the predictive capacity of this biomarker, it’s close to random, so of around one. So, this model, if you're looking at percentage of woman which identify as high risk, close to 20 percent of them are likely to develop cancer in five years, whereas the risk of cancer in the population is closer to 7 percent.
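[Editor's note: The two figures quoted above can be compared as a simple ratio. The sketch below is an illustration only and is not part of the model or studies discussed. The function name `relative_risk` is hypothetical, and the inputs are the rounded percentages stated in the conversation: about 20 percent five-year risk among women the model flags as high risk, against about 7 percent in the general population.]

```python
def relative_risk(risk_in_flagged_group: float, baseline_risk: float) -> float:
    """Ratio of the risk in a flagged group to the baseline population risk.

    A value near 1.0 means the flag carries little information;
    larger values mean the flagged group is enriched for the outcome.
    """
    if not 0.0 < baseline_risk <= 1.0:
        raise ValueError("baseline_risk must be in (0, 1]")
    if not 0.0 <= risk_in_flagged_group <= 1.0:
        raise ValueError("risk_in_flagged_group must be in [0, 1]")
    return risk_in_flagged_group / baseline_risk


# Rounded figures as quoted in the transcript (assumed, not study data).
flagged_five_year_risk = 0.20   # women the model identifies as high risk
population_five_year_risk = 0.07

ratio = relative_risk(flagged_five_year_risk, population_five_year_risk)
print(f"Flagged group risk is about {ratio:.1f}x the baseline")
```

[On those rounded figures, the flagged group carries a little under three times the baseline risk. A ratio near one would mean the flag adds no information, which appears to be the sense of "around one" in the remark about the density marker.]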

With lung cancer, you can do actually much better. The accuracy there in the two years, it's a high 80s. Even if you're looking at seven years, or six years, you're close to like 79 percent. So, you really have this capacity to see the future. And once we know what's to come, we can change the screening, and we can think about developing drugs that can actually prevent the disease.

MS. VENKATARAMAN: Ah, okay. So where are we with that? Where are we in the chain of [unclear]?

DR. BARZILAY: So we--one of the challenges of these technologies, they're trying to do the task. AI does a task that human cannot do. No radiologists, whenever the machine gives them a prediction, can say whether the machine did the right reasoning. If you're just predicting cancer, you can just look at it and say, yeah, they don’t know it. But here you're predicting something human cannot validate.

So, for this reason, we had to go to many, many countries and to many hospitals in the United States, and we broadly validated the breast cancer tool. And it's open-source tool. It has been validated. And there are a number of hospitals which are using it in different capacities.

For instance, at MGH, during the pandemic, when the access to mammogram was greatly reduced, this tool was used to prioritize women who need to have a mammogram despite the reduced capacity, and there are various clinical trials, prospective clinical trials, that are kind of studying what is the best way to utilize this tool. Because it is not enough to say to the patient, you are high risk; you actually need to do something. And the question, which is not an AI question, is what do you do next?

And there are various trials now that are going for breast, and we're starting, actually, hopefully jointly with VA to do it in lung cancer space.

MS. VENKATARAMAN: Okay, so maybe coming to a clinic near you.

Dr. Wegrzyn, Dr. Barzilay just mentioned, you know, the possibility to be able to drug against something preventatively. How are you working on AI as a tool for drug development and for advancing that area? Because that's a hugely complex and different side of this than diagnosis.

DR. WEGRZYN: Yeah, I think there's some pretty interesting tools that we can talk about our current investments. So, looking at predictive AI, we do have a program that we launched, called MATRIX, which is taking all 3,000 FDA-approved drugs, and using some of the tools of AI machine learning to look across every possible disease indication in an agnostic way.

So those drugs that have been approved for a certain indication--all of them have. But what is the data telling us for other diseases that they can be useful for? So, in some cases, there may be cures sitting on the shelf; we yet haven't discovered that. And so using this program, we want to make predictions of what are some of the, let's say, top 20-25 best hits that we can then go into the laboratory to see if we can validate can these models start to predict where these drugs might be used elsewhere.

Some of the challenges are the data that's available now, of course, is the data that's been submitted because FDA does initial drug applications. So, getting access to some of that proprietary data that the drug companies might have, or you know, other data sources is going to be really what is going to drive the quality of those models. So those are sort of on the shelf things we can do today, predictive AI.

With generative AI, we're now looking at novel vaccine antigen production. So, we have a program called APECx, which is saying, okay, we do have some vaccine development that we all know about. What about other totally new families of viruses that we don't have vaccines for yet? How can we start to generate new antigens that are not only efficacious but also we can learn about? Are they manufacturable? Are they thermostable? So all of the things that could be a hindrance potentially for vaccine development, we can build that into the design of those antigens.

MS. VENKATARAMAN: So how does a large language model accelerate that process? What's the role?

DR. WEGRZYN: So, in the former case, it's really there's a lot of things that it can do. So, if you have an FDA-approved drug, you could be looking at, for example, publications to see are there any clues inside publications that might tell us that, you know, one of the drug targets this drug is targeting is--could also be relevant in this disease as one example from the literature.

Of course, you want it to be looking at the electronic health records to understand, you know, who are even the patient populations that we want to be looking at here.

But part of the effort is really to, in some ways, answer your question. So, we don't know the limits of this technology and if it will even work for the task at hand that I described. So, it's--a lot of what ARPAs do is demonstrate the art of what's possible, and derisks that. And so it really is a series of hypotheses that we'll be testing in these programs.

Too, these are, you know, big moonshot questions. We might not hit the moon on these. But if we hit low Earth orbit, maybe there will be some learnings that really advance the state of the art.

MS. VENKATARAMAN: And can you talk specifically about the application of that to rare diseases, which afflicts some 30 million Americans, hundreds of millions of people around the world where there's just been an intractable problem of how do we make progress in diseases that individually affect so few people, but collectively, so many?

DR. WEGRZYN: Yeah. So, in the case of MATRIX, it really is, you know, leveraging those off-the-shelf solutions, where there might not be incentives for drug companies to pursue a disease that only has 100 patients, right? So, we might be able to identify those targets.

But since you write about the future, maybe we can take a little peek to what it could look like in the future. So, if you think about failures in a lot of drug development, maybe rare in particular, there's failure sometimes in the toxicity of those drugs, in the pharmacokinetics of how these drugs are turned over. Even the animal models don't exist in some of those cases. And sometimes, people seem to be surprised that humans aren't mice. So, when the--when the clinical trials fail, you know, we really don't have great models.

And then the clinical trials themselves may fail, or may never even be started, because they’re so costly. To do it for a hundred or a thousand patients is just a non-starter for a company.

So, what if we could start to use some of these AI tools to predict toxicity, to predict the ability of the pharmacokinetics so you can start to simplify dosing? And then what if we can completely replace animal models with models that actually look like a human and behave like a human in those studies?

And then, of course, you mentioned using these tools to triage the patients that need it the most. How do we triage patients for a clinical trial? If you stack all of those innovations on top of the other, you can take drug discovery and bringing that forward to patients from something that takes years to something that may take just months and really before you even start an experiment be able to predict your success in a much better way.

So, in ARPA, what we would do is break that down into the projects and the transactions that we need to invest in to make that true.

MS. VENKATARAMAN: Dr. Barzilay, one of the challenges with the application of AI to medicine and healthcare, even at the research stage, but particularly once we go and think about the clinic and treating patients, is that the access to technologies and medicine has historically been not evenly distributed, and we see even in the predictive models being used in healthcare today biases in terms of how those models are used and what they reflect of different populations. How in your work--do you see solutions to that in your work, both to the uneven access to the technologies and to the bias that we've seen thus far in a lot of tools like this?

DR. BARZILAY: So this is--I would actually want to start with the first part of this question, on access to the technology. For years, you know, I would be interviewed somewhere, and I will say there is no AI in healthcare. Think when did you last time went to the doctor and you see any AI there? You know, I haven't. My colleagues haven't. And people say, no, it's there. You just don't know.

So finally, my colleague at Stanford, James Zou, who wrote a paper when he looked at the billing of the--you know, all the American insurers from 2018 to 2023. And the question that he asked, how much of it goes to their AI tool? So he looked at 500 FDA-approved tools and ask how many of them actually billed.

So, the results of the study is that from I think 9 billion cost less than 100,000 went to all the AI tool collectively. There were only four tools that were actually billed, and the one that was billed the most, I think it’s some cardiology tool, had 67,000 billings. So, this is really a scary piece, that all these great technologies that we are developing, that is created in the United States, is actually not being translated into the United health--you know, into the healthcare system, and other countries actually way ahead of the United States in this area.

And, you know, there are a lot of studies that demonstrate that part of it has to do with the billing, with how codes are designed. But today, we don't really have a great solution of translating this great technology into concrete outcomes. So, if you would ask me what is more important, to worry about the bias or to worry about translation, I would say, let's just start translating because the comparison is not the perfection. The comparison is all the patients who are under diagnosed, who get wrong treatments, for not having access to care. So, I think we should really focus on the first part of this equation.

MS. VENKATARAMAN: Do you see a relationship between those two problems, though? Like if there's a reaction to a tool because it is biased, that its uptake might be affected by that?

DR. BARZILAY: I think that we have as I mentioned earlier, a tool, we have measures today which are not AI measures, which are shown to be biased, like, you know, breast cancer assessment or lung cancer assessment, which are racially biased, which don't work for different classes of patients. And they are there, and they are reimbursable, and so on.

So, I think that this is a really important question. And we're thinking about it a lot, and I see there are a lot of technological solutions that can help. But first, let's bring technology in.

But to answer your question, it is indeed, you know, a serious question what happens when these models are making predictions that humans cannot validate, and they're systematically biased. And unfortunately, for years, some of the datasets that were collected by NCI were not representing, you know, the full American population, like NLST, a very big trial for lung cancer doesn't have almost African American in the whole very big set of images. So, this is indeed an issue.

But I think given the current worryness [phonetic] on one hand, people are much more sensitive to what is in the data and whether it is representative of the population. On the other hand, there is a lot of work on algorithms for detecting biases, for teaching the models when to say I don't know when they are uncertain. Seeing the right developments in this field, but we first have to bring the technology into hospitals.

MS. VENKATARAMAN: Okay, two rapid fire questions, because you're so--both so fascinating that we've gone way off script.

So one is--one is a question from the audience. So Agnieszka from Maryland asks, what is the most urgent question that is not being asked yet in the current public discussion about artificial intelligence? I would say, if we can answer from the health perspective, that'd be great.

Dr. Wegrzyn, you first.

DR. WEGRZYN: Maybe pulling on that last thread, it's how do we just get it out there and start testing it. So, because of some of the biases of the models that have been made, they will degrade; performance will degrade when we get out into the real world. And so I think that's--how are we getting out there? How are we making it more accessible?

Really importantly, in an aspirational type of note, how are we using this to augment healthcare providers today to allow them to be top of license, to do what they went to school for to be medical doctors or community healthcare workers? How are we leveling up their skills, so you don't always have to go back into a central care setting. And so that's, you know, assisted AI, assisting task guidance, et cetera. These are the questions that I would be really excited to start adopting in that healthcare environment.

MS. VENKATARAMAN: Dr. Barzilay, the most urgent question we're not--

DR. BARZILAY: I think how to me these technologies, there were translation really fast. When we're thinking today how many years it takes to bring new technology, sometimes it's decades if we’re thinking about drugs, and very, very slow. So, with AI technologies, you've seen how fast the technology that you're using today is changing.

MS. VENKATARAMAN: And that’s about regulation or that’s about people just welcoming it?

DR. BARZILAY: It’s about how do we design clinical trials. How do you bring if there was improvement in the technology? We're not testing now in a big clinical trial obsolete technology? How do you update it? And of course, how do we change FDA regulations that truly, truly can benefit for significant redesign in the AI space?

MS. VENKATARAMAN: Okay. And here's the last one that's closing every conversation today at the summit. Dr. Barzilay, then Dr. Wegrzyn, who is the smartest person you have heard or read on the future of AI, aside from each other, of course.

If you already have yours, feel free to jump in.

MS. VENKATARAMAN: Wow, the silence is deafening.

MS. VENKATARAMAN: Are we all--are we all not smart enough yet?

DR. BARZILAY: I just think that a lot of what I read about AI, especially within for general audience, if my students would submit it to me as part of their homework in machine learning class, they will not get a pass in the class. So this sea of misinformation, it's really hard to find, you know, pearls of wisdom for me. But there is a lot of amazing technical work that is coming out, very inspirational work. But oftentimes, maybe I'm not reading the right authors, but what I read, I can't really pick one. Sorry.

DR. WEGRZYN: Poignant. I've been excited--actually, I won't name anybody, but I think the group of scientists that really sees nucleic acid as a language. And so, you know, there's a beginning, there's a middle, there's an end to every gene. So natural language processing, a lot of these tools should be working with genetics as a language. And so whoever unlocks that, I think it's going to be incredibly powerful for the design of new drugs, for the understanding of our own genetics. And really unlocking that future of genome editing is going to be a really, really powerful tool. And I don't think there's any one person, but I'm really excited to see that field move forward.

MS. VENKATARAMAN: Okay, well, here's a call for more intelligent voices like the two people in this room talking about this topic. Thanks for this illuminating conversation, and thanks to everyone for being here. And the program will continue.

Harnessing AI’s Potential

MS. KOCH: Hi, everyone. I'm Kathleen Koch, best-selling award-winning author and longtime Washington correspondent.